Variable Refresh Rates – G-sync and FreeSync

Original article 5 June 2015. Last updated 18 April 2019

Introduction

If you’re a gamer, it’s always been a challenge to balance the performance of your graphics card with your monitor. For many years people have had to live with issues like “tearing”, where the image on the screen distorts and “tears” in places, creating a distracting and unwanted experience. Tearing occurs when the frame rate is out of sync with the refresh rate of the display. Historically the only real option has been to use a feature called Vsync to bring the two into sync, but this introduces some issues of its own, which we will explain in a moment. Back in 2014 – 15 we saw a step change in how refresh rates are handled between graphics card and monitor with the arrival of “variable refresh rate” technologies. NVIDIA and AMD, the two major graphics card manufacturers, each have their own approach to making this work, which we will look at in this article. We are not going to go into great detail about the graphics card side of things here, as there’s plenty of material online about that. We instead want to focus more on the monitor side of things, as is our interest at TFTCentral.

Frame Rates and Vsync

As an introduction, monitors typically operate at a fixed refresh rate, commonly 60Hz, 120Hz, 144Hz or above. When running graphically intense content like games, the frame rate will fluctuate, and this poses a potential issue for the user. The frame rate your system can achieve depends heavily on the power of your graphics card and PC in general, along with how demanding the content itself is. It is also affected by the resolution of your display and the game’s detail and enhancement settings, amongst other things. The higher you push your settings, the more demand there will be on your system and the harder it might be to achieve the desired frame rate. This is where tearing can start to be a problem, as the frame rate output from your graphics card no longer matches the fixed refresh rate of your monitor. Tearing is a distracting image artefact where the image becomes disjointed or separated in places, causing issues for gamers and an unwanted visual experience.

Above: A brief example performance capture averaging 60 FPS but showing very strong stuttering.

Traditionally there were two main options for how frames are passed from the graphics card to the monitor, controlled by a feature called Vsync that is simply switched on or off.

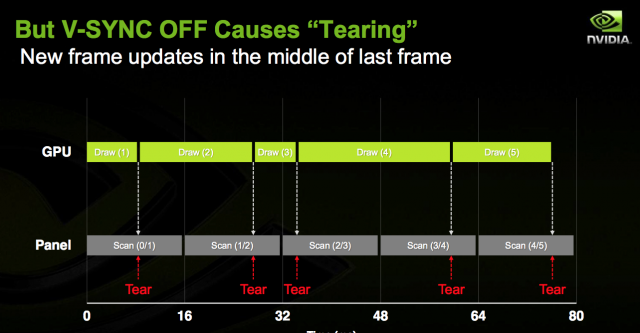

At the most basic level ‘VSync OFF’ allows the GPU to send frames to the monitor as soon as they have been processed, irrespective of whether the monitor has finished its refresh and is ready to move on to the next frame. This allows you to run at higher frame rates than the refresh rate of your monitor but can lead to a lot of problems. When the frame rate of the game and the refresh rate of the monitor differ, the two become unsynchronised. This lack of synchronisation, coupled with the way monitors refresh (typically from top to bottom), causes the monitor to display a different frame towards the top of the screen than towards the bottom. The result is distinctive ‘tearing’ on the monitor that really bothers some users. Even on a 120Hz or 144Hz monitor, where some users claim that there is no tearing, the tearing is still there; it is simply less noticeable. Tearing becomes particularly noticeable during faster horizontal motion (e.g. turning, panning, strafing), especially at lower refresh rates.
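To illustrate where the tear lands, here is a minimal Python sketch under a simplified model we are assuming purely for illustration: the panel scans out top to bottom at a constant speed for the whole refresh period, with no blanking interval. Whenever a new frame arrives part-way through a refresh, the rows above that point still show the old frame, so the tear sits at the matching fraction of the screen height. The function name `tear_rows` is hypothetical.

```python
from fractions import Fraction


def tear_rows(frame_ready_ms, refresh_hz, height_px):
    """Return (refresh_index, row) for each buffer swap that lands mid-scanout.

    Simplified model (an assumption): scanout runs top to bottom at a constant
    speed for the whole refresh period, with no vertical blanking interval.
    """
    period = Fraction(1000, refresh_hz)  # refresh period in ms, kept exact
    tears = []
    for t in frame_ready_ms:
        t = Fraction(t)
        refresh_index = t // period  # which refresh is being scanned out
        phase = (t - refresh_index * period) / period  # 0 = top edge, 1 = bottom
        row = int(phase * height_px)
        if row > 0:  # a swap exactly at the start of a refresh shows no tear
            tears.append((refresh_index, row))
    return tears


# 100fps on a 60Hz, 1080-row panel: a new frame every 10 ms
print(tear_rows([10, 20, 30, 40, 50], 60, 1080))
```

With a new frame every 10 ms on a 60Hz panel, the tear moves to a different row on every refresh and some refreshes contain two tears, which is why the effect looks so disjointed during fast motion.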

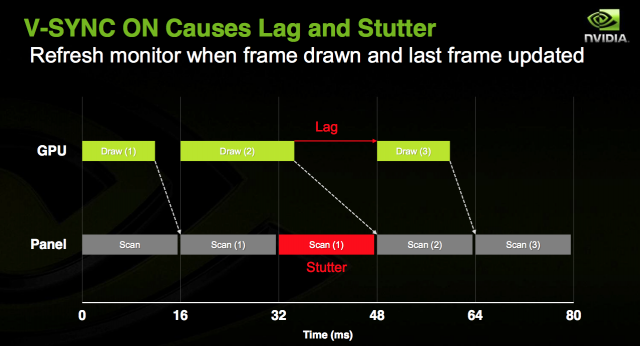

The solution to this tearing problem for many years was the ‘VSync ON’ option, which essentially forces the GPU to hold a frame until the monitor is ready to display it, i.e. once it has finished displaying the previous frame. It also caps the frame rate at the monitor’s refresh rate. Whilst this eliminates tearing, it also increases lag, as there is an inherent delay before frames are sent to the monitor. On a 120Hz monitor the lag penalty is half that of a 60Hz monitor, and on a 144Hz monitor it is lower still. It is still there, though, and some users feel it disconnects them from game play somewhat. When the frame rate drops below the refresh rate of the monitor, this disconnected feeling increases to a level that will bother a large number of users. Some frames will be processed by the GPU more slowly than the monitor is able to display them; in other words, the monitor is ready to move on to a new frame before the GPU is ready to send it. So instead of displaying a new frame, the monitor displays the previous frame again, resulting in stutter. Stuttering can be a major problem when using the Vsync ON option to reduce tearing.
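The hold-and-wait behaviour can also be sketched in Python. This is a simplified model we are assuming for illustration, not how any driver is actually implemented: frames become ready at the given times, each one is held until the next refresh boundary, and only one new frame can be shown per refresh. Any refresh with no new frame repeats the previous one, which is the stutter described above. The function name `vsync_on` is hypothetical.

```python
import math
from fractions import Fraction


def vsync_on(frame_ready_ms, refresh_hz):
    """Simplified Vsync ON model (an assumption for illustration).

    Frames are already rendered at the given times (in ms) and the GPU never
    blocks. Each frame is held until the next refresh boundary, and at most
    one new frame can be shown per refresh.
    """
    period = Fraction(1000, refresh_hz)  # refresh period in ms, kept exact
    slots, waits = [], []
    next_free = 0
    for t in frame_ready_ms:
        t = Fraction(t)
        # first refresh boundary at or after the frame is ready and not yet used
        slot = max(math.ceil(t / period), next_free)
        slots.append(slot)
        waits.append(slot * period - t)  # extra delay caused by holding the frame
        next_free = slot + 1
    # refreshes between two new frames repeat the previous frame (stutter)
    repeats = sum(b - a - 1 for a, b in zip(slots, slots[1:]))
    return slots, waits, repeats


slots, waits, repeats = vsync_on([5, 40], 60)
print(slots, [float(w) for w in waits], repeats)
```

Here the first frame waits about 11.7 ms for refresh 1, and because the second frame is not ready in time for refresh 2, that refresh repeats the first frame before the second appears at refresh 3. Halving the refresh period (120Hz) halves the worst-case wait, in line with the lag comparison above.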

During Vsync ON operation there can also be a sudden slowdown in frame rates when the GPU has to work harder. Because a frame that misses a refresh has to wait for the next one, the frame rate can suddenly halve, for example from 60 frames per second to 30 frames per second. During Vsync ON, if your graphics card is not running flat-out, these frame rate transitions can be very jarring. These sudden changes in frame rate create sudden changes in lag, and this can disrupt game play, especially in first-person shooters.
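The halving effect follows from simple arithmetic. Assuming double-buffered Vsync and a constant render time per frame (an idealised model for illustration), each frame occupies a whole number of refreshes, so the achievable frame rate is the refresh rate divided by that number. The function name `vsync_on_fps` is hypothetical.

```python
import math
from fractions import Fraction


def vsync_on_fps(render_ms, refresh_hz):
    """Steady-state frame rate under double-buffered Vsync ON, assuming every
    frame takes the same time to render (an idealised model)."""
    period = Fraction(1000, refresh_hz)  # refresh period in ms
    # a frame that misses a refresh waits for the next one, so it occupies
    # a whole number of refresh periods
    refreshes_per_frame = max(1, math.ceil(Fraction(render_ms) / period))
    return Fraction(refresh_hz, refreshes_per_frame)


for ms in (16, 17, 34):
    print(ms, "ms per frame at 60Hz ->", vsync_on_fps(ms, 60), "fps")
```

On a 60Hz monitor a 16 ms frame still fits in one refresh and runs at 60fps, but a 17 ms frame needs two refreshes and drops straight to 30fps, and a 34 ms frame drops to 20fps.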

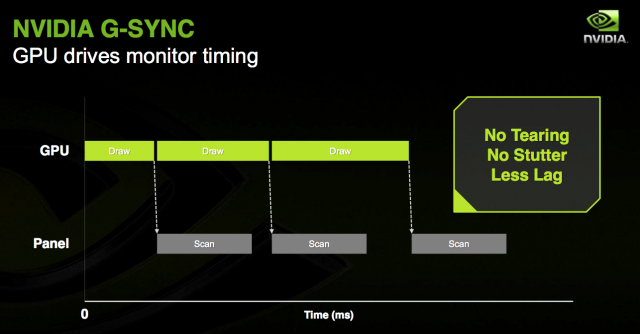

Variable Refresh Rate – Adaptive-Sync

To overcome these limitations of Vsync, both NVIDIA and AMD have introduced new technologies based on a “variable refresh rate” (VRR) principle. These technologies can be integrated into monitors, allowing them to dynamically alter the monitor refresh rate depending on the graphics card output and frame rate. The refresh rate of the monitor is still limited to its maximum in much the same way as without a variable refresh rate technology, but within its supported range it adjusts dynamically to match the frame rate of the game. By doing this the monitor refresh rate is kept synchronised with the GPU. You don’t get the screen tearing of having Vsync disabled, nor do you get the stuttering or input lag associated with using Vsync. You get the benefit of the higher frame rates of Vsync OFF but without the tearing, and without the lag and stuttering caused if you switch to Vsync ON.
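A minimal Python sketch of the principle, assuming a monitor whose VRR range is passed in as the hypothetical parameters `vrr_min_hz` and `vrr_max_hz`: within the range the monitor simply refreshes when the frame is ready, above it the refresh rate is capped at the panel maximum, and below it the behaviour depends on the implementation, so the sketch returns `None`.

```python
from fractions import Fraction


def vrr_refresh_interval(frame_time_ms, vrr_min_hz, vrr_max_hz):
    """Refresh interval (ms) a VRR monitor would use for one frame, or None if
    the frame rate falls below the VRR range (behaviour there varies)."""
    shortest = Fraction(1000, vrr_max_hz)  # panel cannot refresh faster than this
    longest = Fraction(1000, vrr_min_hz)   # or hold a frame longer than this
    ft = Fraction(frame_time_ms)
    if ft < shortest:
        return shortest  # GPU outpaces the panel: refresh rate is capped
    if ft > longest:
        return None      # below the range: implementation-specific fallback
    return ft            # in range: refresh as soon as the frame is ready


for ft in (5, 20, 30):
    print(ft, "ms frame ->", vrr_refresh_interval(ft, 40, 144))
```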

NVIDIA G-sync

NVIDIA were the first to launch variable refresh rate capability, with their G-sync technology. G-sync launched in mid 2014, and the first screen we tested with it was the Asus ROG Swift PG278Q. It has been used in many gaming screens since with a lot of success.

The G-sync Module

Traditionally NVIDIA G-sync required a proprietary “G-sync module” hardware chip to be added to the monitor, in place of a traditional scaler chip. This allows the screen to communicate with the graphics card to control the variable refresh rate and gives NVIDIA a level of control over the quality of the screens produced under their G-sync banner. As NVIDIA say on their website: “Every G-sync desktop monitor and laptop display goes through rigorous testing for consistent quality and maximum performance that’s optimized for the GeForce GTX gaming platform”.

This does, however, add to production costs and therefore to the retail price of these hardware G-sync displays, often by around £100 – 200 compared with alternative non-G-sync models. The premium is even higher for the new v2 module, which often adds £400 – 600. This is often criticised by consumers who dislike having to pay the “G-sync Tax” to get a screen that can support variable refresh rates from their NVIDIA graphics card. There have been some recent changes to this in 2019, which we will discuss later, allowing support for G-sync on other screens without the module.

There have been 3 generations of the G-sync module produced by NVIDIA to date, although the first two generations are normally grouped under a single “Version 1” label as they are so similar, with v2 representing the real step change in capability.

Above: The G-sync v1 and v2 modules

Using a hardware G-sync module in place of a traditional scaler has some positives and negatives. The module is somewhat limited in video connections, currently offering only a single DisplayPort and a single HDMI connection, whether it’s a v1 or v2 module. In contrast, screens without the chip can support other common interfaces like DVI and VGA, and can also support the increasingly popular USB Type-C connection.

Due to the lack of a traditional scaler, some OSD options might be unavailable compared with a normal screen, so features like Picture In Picture (PiP) and Picture By Picture (PbP) are not supported. The active cooling fan used so far on the v2 module has also been criticised by those who like a quiet PC, particularly where manufacturers have used a noisy fan.

On the plus side, by removing the traditional scaler it does seem that all hardware G-sync module screens have basically no input lag. We have yet to test a G-sync screen that showed any meaningful lag, which is a great positive when it comes to gaming.

NVIDIA G-sync screens with the hardware module generally have a nice wide variable refresh rate (VRR) range. You will often see this listed in the product spec as something like “40 – 144Hz”, or confirmed via third party testing. We have seen lots of FreeSync screens, particularly from the FreeSync 1 generation, with far more limited VRR ranges. NVIDIA also seem to be at the forefront of bringing the highest refresh rate gaming monitors to market first, so you will often see the latest and greatest models with G-sync support a fair while before alternative FreeSync options become available.

Overclocking Capabilities

Overclocking of refresh rates on some displays has been made possible largely thanks to the G-sync module. The presence of this module, and the absence of a traditional scaler, has allowed previously much slower panels to be successfully overclocked to higher refresh rates. For instance, the first wave of high refresh rate 34″ ultrawide screens like the Acer Predator X34 and Asus ROG Swift PG348Q had a 100Hz refresh rate, but were actually using older 60Hz native panels. The G-sync module allowed a very good boost in refresh rate, and some excellent performance improvements as a result. This pattern continues today, as you will often see screens featuring the G-sync module advertised with a normal “native” refresh rate and then an overclocked refresh rate where the panel has been boosted. For instance, there are quite a lot of 144Hz native screens which can be boosted to 165Hz or above thanks to the G-sync module.

The above involves overclocking the LCD panel while operating the G-sync module within its specifications. We should also briefly mention that the G-sync module itself can be overclocked, pushing it a little beyond its recommended specs. As far as we know this has only been done once, with the LG 34GK950G. That screen featured a 3440 x 1440 resolution panel with a natively supported 144Hz refresh rate, but it was combined with the v1 G-sync module. This was presumably to avoid the increased cost of the v2 module, especially as providing HDR support was not a priority. A 3440 x 1440 @ 144Hz signal was beyond the bandwidth capabilities of the v1 module, so natively the screen supports up to 100Hz. It was, however, possible to overclock the G-sync module via the OSD overclocking feature on the monitor, pushing the refresh rate up to 120Hz as a result. The panel didn’t need overclocking here, only the G-sync module. We mention this only in case other monitors emerge where manufacturers opt to use the v1 module for cost-saving reasons but need to push its capabilities a little beyond its native support. It does seem that the chip can be overclocked somewhat if needed.
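To show why a higher refresh rate at this resolution asks more of the module, the sketch below computes the raw active-pixel data rate in Python. It assumes 8-bit RGB (24 bits per pixel) and deliberately ignores blanking intervals and link encoding overhead, so real requirements are higher; it does not attempt to model the v1 module’s actual limits. The function name is hypothetical.

```python
def pixel_data_rate(width, height, refresh_hz, bits_per_pixel=24):
    """Uncompressed active-pixel data rate in bits per second.

    Assumes 8-bit RGB by default. Real links also carry blanking intervals
    and encoding overhead, so true bandwidth requirements are higher.
    """
    return width * height * refresh_hz * bits_per_pixel


for hz in (100, 120, 144):
    print(hz, "Hz:", pixel_data_rate(3440, 1440, hz) / 1e9, "Gbit/s")
```

Moving from 100Hz to 144Hz raises the pixel data rate by 44%, from roughly 11.9 to 17.1 Gbit/s, before any blanking or encoding overhead is added.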

Response Times and ULMB

From our many tests of screens featuring the hardware G-sync module, the response times of the panels and the overdrive used seem to be generally very reliable and consistent, producing strong performance at both low and high refresh rates. This seems to be more consistent than what we have seen from FreeSync screens so far, where the overdrive impulse is often negatively affected by changes to the screen’s refresh rate. NVIDIA also talk about how their G-sync technology allows for “variable overdrive”, where the overdrive is apparently tuned across the entire refresh rate range for optimal performance.
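One way to picture “variable overdrive” is a calibration table of overdrive settings at a few refresh rates, interpolated for whatever refresh rate VRR is currently running at. The Python sketch below is a hypothetical illustration of that idea only; NVIDIA do not publish how the G-sync module actually does it, and the table values and function name are made up.

```python
from bisect import bisect_right
from fractions import Fraction


def overdrive_level(refresh_hz, table):
    """Interpolate an overdrive setting for the current refresh rate.

    `table` is a list of (refresh_hz, level) calibration points sorted by
    refresh rate. Outside the table the nearest end point is used.
    """
    rates = [rate for rate, _ in table]
    if refresh_hz <= rates[0]:
        return Fraction(table[0][1])
    if refresh_hz >= rates[-1]:
        return Fraction(table[-1][1])
    i = bisect_right(rates, refresh_hz)  # first calibration point above refresh_hz
    (r0, l0), (r1, l1) = table[i - 1], table[i]
    return l0 + (l1 - l0) * Fraction(refresh_hz - r0) / (r1 - r0)


# Hypothetical calibration points: (refresh rate in Hz, overdrive level)
TABLE = [(48, 10), (100, 30), (144, 50)]
for hz in (48, 74, 122, 144):
    print(hz, "Hz ->", overdrive_level(hz, TABLE))
```

With this made-up table, 74Hz sits halfway between the 48Hz and 100Hz calibration points, so it gets the halfway overdrive level of 20.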

G-sync modules also often support a native blur reduction mode, dubbed ULMB (Ultra Low Motion Blur). This allows the user to opt for a strobe backlight system if they want, in order to reduce perceived motion blur in gaming. It cannot be used at the same time as G-sync since ULMB operates at a fixed refresh rate only, but it’s a useful extra option on many of these G-sync module gaming screens. Of course, since G-sync and ULMB are NVIDIA technologies, they only work with specific G-sync compatible NVIDIA graphics cards. While you can still use a G-sync monitor with an AMD/Intel graphics card for other uses, you can’t use the actual G-sync or ULMB functions.
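The benefit of strobing can be estimated with a common rule of thumb popularised by Blur Busters: perceived blur width is roughly the motion speed multiplied by how long each frame stays lit. The Python sketch below assumes motion of 960 pixels per second and a hypothetical 1 ms strobe pulse; real ULMB pulse widths vary by monitor and setting, and the function name is hypothetical.

```python
from fractions import Fraction


def motion_blur_px(speed_px_per_s, persistence_ms):
    """Rule-of-thumb perceived blur width in pixels: motion speed multiplied
    by how long each frame stays lit (persistence)."""
    return Fraction(speed_px_per_s) * Fraction(persistence_ms) / 1000


SPEED = 960  # hypothetical panning speed in pixels per second
sample_and_hold = motion_blur_px(SPEED, Fraction(1000, 120))  # lit for a full 120Hz frame
strobed = motion_blur_px(SPEED, 1)  # hypothetical 1 ms strobe pulse
print(float(sample_and_hold), float(strobed))
```

On a normal 120Hz sample-and-hold display each frame stays lit for the full 8.3 ms, giving about 8 pixels of blur at this speed; a 1 ms strobe cuts that to under 1 pixel.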

G-sync Performance

There are plenty of reviews and tests of G-sync online which cover the operation of G-sync in more detail. Our friends over at Blurbusters.com have done some extensive G-sync testing in various games which is well worth a read.

It should be noted that the real benefits of G-sync come into play when viewing lower frame rate content; around 45 – 60fps typically delivers the best results compared with Vsync on/off. At consistently higher frame rates, as you get nearer to 144fps, the benefits of G-sync are not as great, but still apparent. Each user will find a transition point where the benefits of G-sync decrease, and it may instead be better to use the ULMB feature if it’s been included (it is not available when using G-sync). Higher end gaming machines might be able to push out higher frame rates more consistently, so you might find less benefit in using G-sync. ULMB could then help in another very important area, reducing the perceived motion blur caused by LCD displays. It’s certainly nice to have both G-sync and ULMB to choose from on these G-sync enabled displays. Soon after launch NVIDIA added the option to choose how frame rates outside of the supported range are handled. Previously G-sync would revert to Vsync ON behaviour, but the user now has a choice of settings including Fast Sync, V-sync, no synchronisation and allowing the application to decide.

G-sync can be used in both full screen and windowed modes as well for the same tear-free experience.

G-Sync Activation

Above: G-sync options in the NVIDIA control panel

G-sync HDR

With the release of the “v2 module” NVIDIA added support for High Dynamic Range (HDR) to their hardware which is not supported from the v1 module. This allows support for HDR10 content along with G-sync VRR from high end NVIDIA graphics cards and systems.

Their system requirements at the time of writing stipulate a GeForce GTX 1050 GPU or higher with a DisplayPort 1.4 interface, along with Windows 10 as your operating system. It should be noted that the G-sync v2 module is significantly more expensive than the v1 module, estimated to add around £400 – 600 to the retail price of a display featuring it. As we mentioned earlier, the v2 module currently requires an active cooling fan, which some people dislike. It has only been used in a handful of screens so far, so it remains to be seen whether passive cooling options will be used in the future.

We have seen a few G-sync HDR certified displays released or announced so far, but the current approach seems to be aimed primarily at the super high-end. Models like the Asus ROG Swift PG27UQ, for instance, feature the NVIDIA G-sync HDR chip combined with a Full Array Local Dimming (FALD) backlight for an optimal HDR experience. While there’s no specific limitation preventing another form of local dimming backlight, like edge-lit, from being used with the NVIDIA G-sync HDR module, so far it seems to have been paired only with FALD whenever any meaningful HDR is featured. We expect that in the future there will be v2 module screens supporting HDR with edge-lit local dimming, but none have been released so far.

We have seen some other screens, such as the Acer Predator XB273K, released with the v2 module but without any true HDR capabilities, presumably to help cut production costs and the retail price. That model is listed with the VESA DisplayHDR 400 badge, but this is largely meaningless, and the screen has no local dimming capability.

AMD FreeSync

FreeSync launched a bit later than NVIDIA G-sync, on 19th March 2015, and the first screen we tested with it was the BenQ XL2730Z. Like NVIDIA G-sync, it is all about providing support for variable refresh rates, reducing tearing without the lag and stuttering that older Vsync options created. Unlike NVIDIA G-sync’s method, which requires a discrete chip, FreeSync is built on the link interface standards. It uses the DisplayPort Adaptive-Sync industry standard, which allows real-time adjustment of refresh rates over the DisplayPort 1.2a (and above) interface. FreeSync can also be supported over HDMI. Although FreeSync does not require adding extra chips to the monitor, it must be paired with a compatible DisplayPort or HDMI monitor and a Radeon graphics card in order to function. Support over HDMI as well as DisplayPort is one advantage of FreeSync over G-sync.

This helps avoid additional production costs and keeps the retail cost of the screens lower (relative to a G-sync equivalent). It is basically free to implement, with no licensing fees for monitor OEMs, no expensive or proprietary hardware modules, and no communication overhead. As a result you will see FreeSync support included in a much wider range of screens, even those not really aimed at gaming; being free to add, manufacturers often just include it regardless. AMD keep a list of FreeSync capable displays on their website, including confirmation of the VRR range.

Many displays with Adaptive-Sync / FreeSync offer somewhat limited real-life support for VRR. In some cases the VRR operating range is narrow, so VRR only works when the game frame rate stays within a small, specific window, which is often not the case as game frame rates vary significantly from moment to moment. In addition, not all monitors go through a formal certification process (certainly not in the initial FreeSync 1 generation), display panel quality varies, and there may be other issues that prevent gamers from receiving a noticeably improved experience. In theory you can use FreeSync with any screen capable of supporting Adaptive-Sync, but the actual VRR performance can really vary.
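A narrow range matters because of how much of the time the game actually spends inside it. The Python sketch below counts how many samples in a frame-rate trace fall within a given VRR range; the trace values, both ranges and the function name are hypothetical, chosen only to show the contrast.

```python
from fractions import Fraction


def share_in_vrr_range(fps_samples, vrr_min, vrr_max):
    """Fraction of frame-rate samples that fall inside a monitor's VRR range."""
    inside = sum(1 for fps in fps_samples if vrr_min <= fps <= vrr_max)
    return Fraction(inside, len(fps_samples))


trace = [35, 42, 50, 58, 65, 80, 95]  # hypothetical frame-rate samples (fps)
print("narrow 48-75Hz:", share_in_vrr_range(trace, 48, 75))
print("wide 40-144Hz:", share_in_vrr_range(trace, 40, 144))
```

With these numbers the narrow 48 – 75Hz range covers 3 of the 7 samples, while a 40 – 144Hz range covers 6 of them.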

FreeSync 2

Due partly to the lack of certification standards for the FreeSync 1 generation, AMD later released FreeSync 2 in January 2017. This thankfully does include a “meticulous monitor certification process to ensure exceptional visual experiences” and guarantees things like support for Low Frame Rate Compensation (LFC) and support for HDR inputs. AMD also talk about monitors with FreeSync 2 as having low latency. You will see many screens carry the FreeSync 2 badge nowadays, meaning you can have more faith in their VRR performance at the very least.

- A screen must support HDR content to carry the FreeSync 2 badge, although this need not be any meaningful HDR as we will talk about in a moment. It is a requirement of the FreeSync 2 standard though.

- A screen must include LFC to be FreeSync 2 certified.
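LFC works by showing each frame more than once when the frame rate drops below the bottom of the VRR range, so that the refresh rate lands back inside it. The Python sketch below picks the smallest whole-number multiplier that does this; it is a simplified illustration we are assuming, not AMD’s driver logic, and the function name is hypothetical.

```python
import math
from fractions import Fraction


def lfc_multiplier(fps, vrr_min, vrr_max):
    """Smallest whole number of times to show each frame so that the resulting
    refresh rate (fps x multiplier) lands inside the VRR range.

    Returns 1 when no repetition is needed and None when no multiple fits.
    """
    if fps >= vrr_min:
        return 1  # at or above the bottom of the range: LFC not needed
    k = math.ceil(Fraction(vrr_min) / Fraction(fps))
    return k if fps * k <= vrr_max else None


for fps, lo, hi in [(30, 48, 144), (20, 48, 144), (40, 48, 75)]:
    print(f"{fps}fps on a {lo}-{hi}Hz range ->", lfc_multiplier(fps, lo, hi))
```

In this model a range whose maximum is at least double its minimum always leaves room for a suitable multiple, because the chosen multiple always lands below twice the minimum. A narrow 48 – 75Hz range, by contrast, has no valid multiple for 40fps, since doubling it gives 80Hz.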

Performance

While the VRR range and performance might be more consistent with modern FreeSync 2 certifications, some of the other benefits associated with NVIDIA G-sync may not apply to these alternative screens. As there is no hardware G-sync module added to the screen, a normal scaler chip is used, and this can in some cases result in additional input lag. You will still find plenty of FreeSync screens with low lag, but you will need to check third party tests such as our reviews to be sure. It’s not as simple as with G-sync screens, where the presence of the hardware module basically guarantees there will be no real input lag. With FreeSync screens we are more reliant on the manufacturer focusing on reducing lag than we are with G-sync screens.

Above: PiP and PbP options

By using a traditional scaler, FreeSync screens can support additional features like Picture In Picture (PiP) and Picture By Picture (PbP). They also typically offer more hardware aspect ratio control options. A traditional scaler also gives manufacturers more flexibility to add additional video interfaces, rather than being limited to 1x DisplayPort and 1x HDMI like G-sync module screens are.

The control of the monitor’s overdrive impulse seems to vary with FreeSync displays. Early models (and potentially some still available today) had a bug which meant that if you connected the screen to a FreeSync system, the overdrive would be turned off completely! Even disabling the FreeSync option in the AMD control panel didn’t help; you instead had to “break” the FreeSync chain by using a non-FreeSync card, a different connection or similar. Thankfully we haven’t seen problems with that early bug in more recent times. Many manufacturers also include an option to enable and disable FreeSync within the monitor OSD, which gives you a firmer way to disable it if you ever want to, independent of the graphics card software.

We have seen quite a lot of variation in pixel response times from FreeSync screens, and they do seem to be a lot more hit and miss than G-sync equivalents. On G-sync screens you commonly get response times that remain strong and consistent across all refresh rates. Sometimes the response times will be controlled more dynamically, with the overdrive impulse increasing as the refresh rate goes up. On many FreeSync screens we have seen, the overdrive impulse is oddly controlled in the opposite way, being turned down as the refresh rate goes up. This can help eliminate overshoot problems but can often lead to slower response times at the higher refresh rates, where you really need them to be faster! You will again have to rely on third party testing like that in our reviews, but it’s something we’ve seen from quite a few FreeSync screens.

On NVIDIA G-sync screens the ULMB blur reduction feature is associated with the hardware chip added to the monitor, and so many G-sync capable screens also offer ULMB as well. Since there is no added chip on FreeSync screens manufacturers have to provide blur reduction modes entirely separately, which has meant they are far less common on FreeSync screens than on G-sync screens. Again we are reliant on the manufacturers to focus on this if blur reduction is a requirement.

On the positive side, you will sometimes see a FreeSync version of a screen include a factory calibration for improved setup, colour accuracy etc., where the G-sync version does not. The LG 34GK950F and G are good examples of this, with the F model including a factory calibration. We can’t be sure of the reason, but we believe it is related to the use of the v1 module, which perhaps has some limitation in terms of factory calibration profiling. To be honest, we cannot think of any v1 G-sync screen which includes a specific factory calibration process or report. We have seen some v2 module screens with factory calibration though, like the Asus ROG Swift PG27UQ, so it is at least possible on v2 module screens. Whether it’s a limitation or just a manufacturer choice, it still seems to be a common pattern when comparing G-sync and FreeSync equivalent screens.

HDR Support

While the FreeSync 2 standard now includes support for HDR content, we have yet to see any displays pair FreeSync with higher end FALD backlights. You will find some FreeSync screens with local dimming support for HDR, but they are all currently edge-lit solutions of varying quality and use. FALD seems to be so far limited to G-sync HDR screens at the top end so if you want the best local dimming control for HDR content, you would need to select a G-sync screen at the moment.

Because FreeSync 2 can support an HDR input source, it has unfortunately led to what we consider to be fairly widespread abuse of the HDR term in the market. We’ve talked in a separate article (well worth a read!) about our concerns with the VESA DisplayHDR standards, and in particular their HDR400 certification. It doesn’t take much to label a screen with HDR400 support and mislead consumers into thinking they are buying a screen which will offer them a good HDR experience. Unfortunately most of these so-called HDR screens offer no real HDR benefits, for reasons explained in our other article. If you see an NVIDIA G-sync screen marketed with HDR, it almost certainly has a high end local dimming solution (at least at the time of writing). A FreeSync 2 screen marketed with HDR, on the other hand, might offer nothing meaningful.

Activation

AMD FreeSync can support dynamic refresh rates between 9 and 240Hz, but the actual supported range depends on the display, and this does vary. When you connect the display to a compatible graphics card with the relevant driver package installed, the display is detected as FreeSync compatible and options are presented within the software. You will normally see the supported FreeSync range listed, and can turn the setting on and off as shown above. On many screens you may also have to enable FreeSync within the monitor OSD before this option is available in the graphics card software. You may also need to configure it within a given application, as explained on AMD’s website.

We don’t want to go into too much depth about game play, frame rates and the performance of FreeSync here as we will end up moving away from characteristics of the monitor and into areas more associated with the operation of the graphics card and its output. FreeSync is a combined graphics card and monitor technology, but from a monitor point of view all it is doing is supporting this feature to allow the graphics card to operate in a new way. We’d encourage you to read some of the FreeSync reviews and tests online as they go into a lot more detail about graphics card rendering, frame rates etc as well.

G-sync Support from FreeSync Screens

In January 2019 NVIDIA surprised the market by opening up VRR support from their graphics cards to other non-G-sync displays featuring Adaptive-Sync capability. This created a whole new world of opportunities for consumers, who can now benefit from dynamic refresh rates with their NVIDIA card without being restricted to screens with the added G-sync hardware module. There are far more Adaptive-Sync/FreeSync displays available, often with more connections and additional features, not to mention at a comparatively lower retail cost. You need a modern NVIDIA graphics card and operating system to make use of this G-sync support, namely a GTX 10-series or RTX 20-series card or later.

There is a challenge with opening up this support on non-G-sync module displays, in that the performance of FreeSync screens can vary widely. Some have very limited VRR ranges, and some have issues with flickering and artefacts in practice. This has largely come about due to the lack of any real certification or testing scheme for the first wave of FreeSync screens (before FreeSync 2 was introduced), so you do have to be a bit careful when selecting a FreeSync screen if you want to use it for VRR, whether from an AMD card or from an NVIDIA card via this new support. This is in contrast to NVIDIA G-sync module screens, which go through thorough testing and certification thanks to the added hardware chip to ensure performance standards are met.

“G-sync Compatible”

Because there is such a wide range of FreeSync screens on the market, NVIDIA have come up with an additional certification scheme called “G-sync Compatible”. They test monitors, and if a monitor delivers a baseline VRR experience and passes the certification, its VRR features are activated automatically in the NVIDIA control panel. NVIDIA keep an up to date list of monitors that are officially G-sync Compatible, and they’ll continue to evaluate monitors and update the support list going forward. G-sync Compatible testing validates that the monitor does not show blanking, pulsing, flickering, ghosting or other artefacts during VRR gaming. It also validates that the monitor can operate in VRR at any game frame rate by supporting a VRR range of at least 2.4:1 (e.g. 60Hz-144Hz), and that it offers the gamer a seamless experience by enabling VRR by default. So a screen which carries the official “G-sync Compatible” badge should in theory offer a reliable VRR experience; at the very least it has been through many tests and checks from NVIDIA to earn that badge.
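The 2.4:1 range requirement is easy to check against a monitor’s advertised VRR range. The helper below is a hypothetical Python sketch covering only that one criterion; the artefact testing NVIDIA also carry out cannot be reduced to a formula like this.

```python
from fractions import Fraction


def meets_vrr_ratio(vrr_min_hz, vrr_max_hz, required=Fraction(12, 5)):
    """True if the VRR range ratio (max / min) is at least 2.4:1."""
    return Fraction(vrr_max_hz) / Fraction(vrr_min_hz) >= required


for lo, hi in [(60, 144), (48, 75), (40, 144)]:
    print(f"{lo}-{hi}Hz:", meets_vrr_ratio(lo, hi))
```

A 60 – 144Hz range sits exactly on the 2.4:1 line, while a 48 – 75Hz range falls well short. Exact fractions are used so that a boundary case like 144/60 is not thrown off by floating-point rounding.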

Basic G-sync Support

Many displays with Adaptive-Sync offer somewhat limited real-life support for VRR. In some cases the VRR operating range is narrow, so VRR only works when the game frame rate stays within a small, specific window, which is often not the case as game frame rates vary significantly from moment to moment. In addition, not all monitors go through a formal certification process, display panel quality varies, and there may be other issues that prevent gamers from receiving a noticeably improved experience. In theory you can use G-sync with any screen capable of supporting Adaptive-Sync, but the actual VRR performance can really vary. The same applies when using AMD graphics cards if the screen’s VRR experience is not up to much.

NVIDIA say that they have tested over 400 Adaptive-Sync displays and found that only a small handful could be certified under their “G-sync Compatible” scheme (14 at the time of writing), which implies that the rest offer a lower level of VRR experience. For VRR monitors yet to be validated as G-sync Compatible, a new NVIDIA Control Panel option lets owners try switching the technology on; it may work, it may partly work, or it may not work at all. We will label this more generically as “G-sync Support” for simplicity. You can find plenty of tests and reports online from owners of different FreeSync screens about how they perform with NVIDIA graphics cards.

You need to be mindful of how displays are marketed with this general G-sync Support, when it hasn’t been officially tested and certified by NVIDIA as “G-sync Compatible”. We have seen some manufacturers list their displays misleadingly as “G-sync Compatible” when officially they have not earned the certification and do not appear on NVIDIA’s list. We expect the manufacturers would be violating NVIDIA’s certification policy by labelling their screens in this way, when in fact what they are offering is the more standard “G-sync support” from their model. We don’t like to see that approach and thankfully many manufacturers are not doing this. To be sure whether a screen has been certified by NVIDIA as G-sync Compatible, we would recommend checking their website and the latest list of confirmed monitors. If it hasn’t, and G-sync is listed at all, chances are it will be supported from an NVIDIA card for some kind of VRR experience, but it may not be great.

G-sync Ultimate

For the absolute best gaming experience NVIDIA recommend NVIDIA G-sync and the newly certified “G-sync Ultimate” monitors. These use the v2 G-sync hardware module, have passed over 300 compatibility and quality tests, and feature a full refresh rate range from 1Hz to the display panel’s maximum refresh rate, plus other advantages like variable overdrive, refresh rate overclocking, ultra low motion blur display modes, and industry-leading HDR with 1000 nits peak brightness, Full Array Local Dimming (FALD) backlights and DCI-P3 colour gamut. You can see from the image above that this category includes (and at the time of writing is limited to) the Asus ROG Swift PG27UQ, the Acer Predator X27 and the new 65″ BFGD HP Omen X Emperium 65. These are clearly very expensive, high-end HDR displays, but NVIDIA classifies them as the ultimate in gaming experience.

What’s Next for the G-sync Module?

NVIDIA tell us that they are still planning to develop normal G-sync screens with the hardware module and have no plans to drop this technology. This hardware chip still ensures a quality and reliable VRR experience for NVIDIA users, while also delivering some of the additional benefits outside of VRR support that we talked about above. We expect G-sync screens to continue to be produced, but perhaps aimed more at the upper end of the gaming market, leaving the lower and middle end more open thanks to the “G-sync Compatible” and “G-sync Support” schemes. The typical £100 – 200 saving on the cost of a monitor is likely to be attractive in the lower and middle range, where VRR can still be supported regardless of the graphics card vendor. We would hope that more manufacturers of Adaptive-Sync/FreeSync screens invest in developing solid VRR implementations to earn certification under the G-sync Compatible scheme, which will give consumers more faith in the performance of their models. They should also focus on ensuring that input lag is low and that additional features like blur reduction backlights are included where possible.

We expect many of the cutting-edge gaming screens, including those with the latest and highest refresh rates, to appear with the traditional G-sync module before FreeSync alternatives are available. That is the part of the market where the G-sync module seems to have a firm grasp right now. The G-sync v2 module also seems, so far, to be a requirement for delivering the top-end HDR experience in the monitor market, with all current FALD backlight models featuring this chip. We expect to see the v2 module used primarily for the really top-end, premium screens with high resolutions, high refresh rates and likely FALD HDR support. The v2 module may also be used in some modern screens where the resolution and refresh rate demand it (and need a DisplayPort 1.4 interface) but where top-end HDR is not necessarily a requirement. Even though there are savings to be made by leaving out HDR and a FALD backlight, the chip is expensive to start with, so this may be cost prohibitive in some cases.

In our opinion the v1 module also has some life left in it. Yes, some screens are now pushing the bandwidth boundaries due to high resolutions and refresh rates, the LG 34GK950G being a prime example. However, there are still plenty of smaller screens, or models with a resolution and refresh rate low enough, to make the v1 chip viable. The v1 chip can’t support HDR, although most of the screens on the market advertised as supporting HDR don’t offer any meaningful HDR anyway. We expect to see the v1 module used in various mid-range screens for a little while longer.
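The bandwidth point can be illustrated with rough arithmetic. The Python sketch below compares the uncompressed active-pixel data rate of a display mode (width × height × refresh rate × bits per pixel) with the effective payload of a four-lane DisplayPort link after 8b/10b encoding: 17.28 Gbit/s for DP 1.2 (HBR2) and 25.92 Gbit/s for DP 1.4 (HBR3). It assumes, as the text implies, that the v1 module is limited to the older DisplayPort interface while the v2 module offers DP 1.4. It deliberately ignores blanking intervals, which add real overhead, so the figures are a lower bound. The modes are illustrative and are not taken from any specific monitor’s specification, and the helper names are hypothetical.

```python
# Effective payload of a 4-lane DisplayPort link after 8b/10b encoding (Gbit/s)
DP_LINK_GBPS = {
    "DP 1.2 (HBR2)": 4 * 5.4 * 0.8,  # about 17.28
    "DP 1.4 (HBR3)": 4 * 8.1 * 0.8,  # about 25.92
}


def pixel_rate_gbps(width: int, height: int, refresh_hz: int, bits_per_pixel: int = 24) -> float:
    """Uncompressed active-pixel data rate in Gbit/s.

    Blanking intervals are ignored, so real requirements are somewhat higher.
    """
    return width * height * refresh_hz * bits_per_pixel / 1e9


def link_fit(width: int, height: int, refresh_hz: int, bits_per_pixel: int = 24) -> dict[str, bool]:
    """Report which links could carry the mode, before blanking overhead."""
    rate = pixel_rate_gbps(width, height, refresh_hz, bits_per_pixel)
    return {name: rate <= capacity for name, capacity in DP_LINK_GBPS.items()}


# Illustrative modes: 24 bits per pixel = 8-bit colour, 30 = 10-bit colour
modes = [
    (2560, 1440, 144, 24),  # about 12.74 Gbit/s
    (3440, 1440, 144, 24),  # about 17.12 Gbit/s
    (3840, 2160, 144, 30),  # about 35.83 Gbit/s
]

for w, h, hz, bpp in modes:
    rate = pixel_rate_gbps(w, h, hz, bpp)
    print(f"{w}x{h} @ {hz}Hz, {bpp} bpp: {rate:.2f} Gbit/s -> {link_fit(w, h, hz, bpp)}")
```

The 3440×1440 ultrawide case at 144Hz lands just under the DP 1.2 figure before blanking is even counted, which shows how little headroom the older interface leaves for high resolution, high refresh rate screens.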

Whether NVIDIA will produce a new version of the module remains to be seen. It is possible they might consider one with the latest video interfaces (DP 1.4 / HDMI 2.0) offered by the v2 module, but without the HDR support, if that helps create a more affordable option: in effect an improved v1 module. This is pure speculation at this stage and has not been confirmed. Alternatively, the cost of the v2 module would need to come down considerably to make it more viable for manufacturers to use. The £100 – 200 premium for the v1 module seems to have been palatable to consumers over the years, but the current estimated £500 – 600 premium for the v2 module could cause problems longer term.

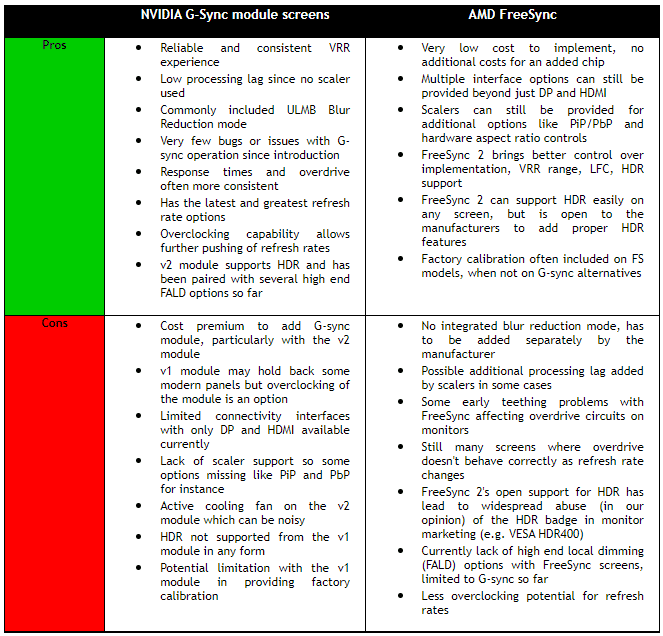

G-Sync vs. FreeSync Comparison

Image courtesy of MSI.com

G-Sync vs. FreeSync Reference Table
