High Dynamic Range (HDR)

Originally published 15 January 2017. Last updated 30 January 2019

Introduction – What is HDR?

You will have heard the term HDR talked about more and more in the TV market over the last few years, with the major TV manufacturers launching new screens supporting this feature. It’s become the ‘next big thing’ in the TV space, with movies and console games being developed to support this new technology. HDR has now started to be adopted more widely in the desktop monitor space as well, and talk of HDR support is increasing. This will bring HDR gaming from PCs and consoles, as well as movies and multimedia, to the desktop. We thought it would be useful to take a step back and look at what exactly HDR is, what it offers you, how it is implemented and what you need to be aware of when selecting a display for HDR content. We will focus here more on the desktop monitor market than on the TV market, since that is our primary focus here at TFT Central.

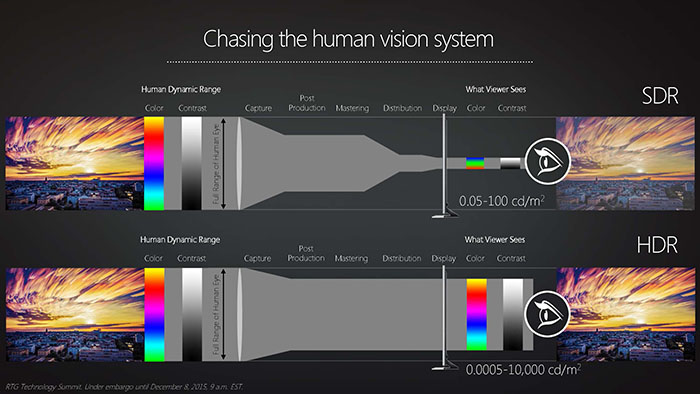

Trying to put this in simple terms, ‘High Dynamic Range’ refers to the ability to display a more significant difference between bright parts of an image and dark parts of an image. This is of significant benefit in games and movies, where it helps create more realistic images and helps preserve detail in scenes where otherwise the contrast ratio of the display may be a limiting factor. On a screen with a low contrast ratio, or one that operates with a “standard dynamic range” (SDR), you may see detail lost in darker scenes, where subtle dark grey tones become black. Likewise, in bright scenes you may lose detail as bright parts are clipped to white, and this only becomes more problematic when the display is trying to produce a scene with a wide range of brightness levels at once. NVIDIA summarizes the motivation for HDR nicely in three points: “bright things can be really bright, dark things can be really dark, and details can be seen in both”. This helps produce a more ‘dynamic’ image, hence the name. The result is a richer and more ‘real’ image than standard range displays and content can provide.

The term HDR has been more broadly associated with a range of screen improvements in marketing material, covering not only improved contrast between bright and dark areas of an image but also improved colour rendering and a wider colour space. So when we talk about HDR in the display market, the aim is to produce better contrast between bright and dark, as well as a more colourful and lifelike image.

Note that the pictures provided in this article showing Standard vs High Dynamic Range are for indicative purposes only, as it’s not possible to truly capture the effects and then view them on a standard range display. They are intended as a rough guide to give you an idea of the differences.

High Dynamic Range Rendering

Linked to HDR is the term ‘High Dynamic Range Rendering’ (HDRR), which describes the rendering of computer graphics scenes using lighting calculations done in high dynamic range. As well as the contrast ratio benefits we’ve already discussed in the introduction, HDR rendering also helps preserve light in optical phenomena such as reflections and refractions, as well as in transparent materials such as glass. In Standard Dynamic Range rendering, very bright light sources in a scene (such as the sun) are capped at 1.0 (white). When this light is reflected, the result must then be less than or equal to 1.0. In HDR rendering, however, very bright light sources can exceed 1.0 to simulate their actual values. This allows reflections off surfaces to maintain realistic brightness for bright light sources.
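A minimal sketch of the difference described above, assuming brightness is expressed relative to SDR white (1.0) and that a reflection simply multiplies the incoming light by the surface’s reflectance. The function names and the sun, water and sky values are hypothetical, chosen only for illustration; real renderers also apply a tone-mapping step before output, which is not shown here.

```python
def render_sdr(light: float, reflectance: float) -> float:
    """SDR: the light source is capped at 1.0 (white) before it is reflected."""
    return min(light, 1.0) * reflectance


def render_hdr(light: float, reflectance: float) -> float:
    """HDR: the light source keeps its real relative intensity, even above 1.0."""
    return light * reflectance


# Hypothetical scene values, relative to SDR white = 1.0
SUN = 50.0        # the sun is far brighter than "white"
WATER = 0.1       # water reflects 10% of the incoming light
PLAIN_SKY = 0.6   # an ordinary patch of sky elsewhere in the frame

sdr_reflection = render_sdr(SUN, WATER)  # 0.1
hdr_reflection = render_hdr(SUN, WATER)  # 5.0
print(sdr_reflection < PLAIN_SKY, hdr_reflection > PLAIN_SKY)  # True True
```

With the SDR cap, the sun’s reflection ends up darker than an ordinary patch of sky, which looks wrong; keeping the uncapped value preserves the ordering you would expect in real life.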

A typical desktop monitor based on a TN Film or IPS technology panel can offer a real-life static contrast ratio of around 800:1 to 1200:1, while a VA technology panel commonly ranges between 2000:1 and 5000:1. The human eye can perceive scenes with a very high dynamic contrast ratio, around 1 million:1 (1,000,000:1). Adaptation to changing light is achieved in part through adjustments of the iris and slow chemical changes, which take some time. For instance, think about the delay before you can see properly when switching from bright lighting to darkness. At any given time, the eye’s static range is smaller, at around 10,000:1. However, this is still higher than the static range of most display technologies, including VA panels, and this is where features like HDR are needed to extend the dynamic range and deliver higher active contrast ratios.
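One common way to compare these figures is in photographic stops, where each stop is a doubling of light, so a contrast ratio of R:1 spans log2(R) stops. A small sketch using the upper ends of the ranges quoted above (the labels are ours):

```python
import math


def stops(contrast_ratio: float) -> float:
    """Dynamic range in stops: each stop is a doubling of light (log base 2)."""
    return math.log2(contrast_ratio)


# Contrast ratios (x:1) taken from the paragraph above
examples = [
    ("TN/IPS monitor, upper end", 1200),
    ("VA monitor, upper end", 5000),
    ("Eye, static range", 10_000),
    ("Eye, with adaptation", 1_000_000),
]

for label, ratio in examples:
    print(f"{label}: {stops(ratio):.1f} stops")
# TN/IPS monitor, upper end: 10.2 stops
# VA monitor, upper end: 12.3 stops
# Eye, static range: 13.3 stops
# Eye, with adaptation: 19.9 stops
```

By this measure even a good VA panel falls about one stop short of the eye’s static range, and several stops short of what the eye manages with adaptation.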

Content Standards and HDR10

One area of the HDR market that is still quite murky is content standards, meaning the way in which content is produced and delivered to compatible displays. There are two primary standards you will hear most about: HDR10 and Dolby Vision. We won’t go into endless detail here, but Dolby Vision is considered to offer superior content as it supports dynamic metadata (the ability to adjust content on a dynamic basis, frame by frame) and 12-bit colour. However, it is proprietary and carries a licensing fee, and it also originally required additional hardware for playback, so it was more expensive to support. HDR10, on the other hand, only supports static metadata and 10-bit colour, but it is an open, royalty-free standard and has therefore been more widely adopted so far. Microsoft and Sony, for instance, have adopted HDR10 for their modern games consoles. It is also the default standard for Ultra HD Blu-ray discs.
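To illustrate why dynamic metadata matters, here is a deliberately simplified sketch. It assumes a display that just scales brightness linearly to fit its own peak, whereas real displays use more sophisticated tone-mapping curves; the function names, the 1000 cd/m2 display and the per-scene peak values are all hypothetical.

```python
def fit_static(scene_peaks: list[float], display_peak: float) -> list[float]:
    """Static metadata: one brightest value for the whole title, so every
    scene is scaled by the same factor. Scene peaks are assumed positive."""
    factor = min(1.0, display_peak / max(scene_peaks))
    return [peak * factor for peak in scene_peaks]


def fit_dynamic(scene_peaks: list[float], display_peak: float) -> list[float]:
    """Dynamic metadata: each scene carries its own brightest value, so only
    scenes brighter than the display can manage are scaled down."""
    return [peak * min(1.0, display_peak / peak) for peak in scene_peaks]


# Hypothetical brightest highlight (cd/m2) in three scenes of a film
scenes = [4000.0, 200.0, 1000.0]
print(fit_static(scenes, 1000.0))   # [1000.0, 50.0, 250.0]
print(fit_dynamic(scenes, 1000.0))  # [1000.0, 200.0, 1000.0]
```

With a single static value, the dark second scene is dimmed to 50 cd/m2 only because a different scene in the film was very bright; per-scene values leave it untouched.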

You don’t need to worry that this is going to turn into another HD DVD vs. Blu-ray war, as despite the varying content standards it is actually relatively easy for a display to support multiple formats. In the TV market it is quite common to see screens that support both Dolby Vision and HDR10, and often other less common standards like Hybrid Log Gamma (HLG) and Advanced HDR.

Samsung have more recently started to drive development of the so-called HDR10+ standard, which is designed to address some of the shortcomings of the original HDR10 implementation, for instance by adding dynamic metadata. On the other side of things, Dolby has recently moved Dolby Vision entirely to software, removing some of the complications of hardware requirements and the associated additional costs.

When it comes to viewing differently rendered content, you need a display that supports the relevant standard. HDR10-compatible displays are very common and HDR10 content is very widely available. Dolby Vision is less common, although some TV sets advertise and include support for those who want to use Dolby Vision encoded content. The monitor market seems to be focused on HDR10 for the time being, and we have yet to see a screen advertised with Dolby Vision support. It will presumably only be a matter of time.

When selecting a display you may want to consider the source of your HDR material and the format it is designed to output, whether that is an HDR-compatible Blu-ray player, a streaming video service like Amazon Prime Video, a games console or a PC game.

Achieving High Dynamic Range and Improving Contrast Ratio

Global Dimming and Dynamic Contrast Ratios

You will probably be familiar with the term “Dynamic Contrast Ratio” (DCR), a technology that has been around for many years and is widely used in the monitor and TV market, although it has fallen out of favour more recently. Dynamic contrast ratios are based on the ability of a screen to brighten and dim the whole backlight unit (BLU) at once depending on the content on the screen. This “Global Dimming” turns the backlight up in brighter scenes and down in darker scenes. Sometimes the backlight will even be turned off completely if the scene is black. That’s rare in real content of course, but it can be achieved in test environments to produce even lower black points, because the screen is basically turned off! This allows manufacturers to promote extremely high dynamic contrast ratio specifications, comparing the brightest white (at maximum backlight intensity) with the darkest black (where the backlight is turned right down, and sometimes even turned off). The technique became very prevalent and we started to see crazy DCR numbers being quoted by screen manufacturers, up in the millions:1. In real-life use, the constant altering of the backlight intensity can prove distracting, and many people didn’t like the feature at all and just turned it off. It also didn’t do a great deal to extend the dynamic range or perceived contrast ratio: whatever the backlight intensity, you were still left with the same static contrast ratio at any one point in time, so the difference between dark and bright within a given scene stayed the same.
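The arithmetic behind those inflated DCR figures can be sketched as follows. This assumes a hypothetical panel with a 1000:1 static contrast and 300 cd/m2 white at full backlight, and a backlight that can be turned down to 1%; the function and constant names are ours.

```python
NATIVE_CONTRAST = 1000.0  # hypothetical static contrast of the panel (1000:1)
FULL_WHITE = 300.0        # hypothetical white luminance at 100% backlight, cd/m2


def frame_levels(backlight: float) -> tuple[float, float]:
    """White and black luminance (cd/m2) at a backlight level between 0 and 1.

    Both scale together, because the LCD always leaks the same fraction
    (1 / NATIVE_CONTRAST) of whatever light sits behind it.
    """
    white = FULL_WHITE * backlight
    black = white / NATIVE_CONTRAST
    return white, black


# Spec-sheet "dynamic" contrast: brightest white at full backlight versus
# darkest black with the backlight turned right down (here to 1%).
brightest_white, _ = frame_levels(1.0)
_, darkest_black = frame_levels(0.01)
dcr = brightest_white / darkest_black
print(f"Quoted DCR: {dcr:,.0f}:1")  # Quoted DCR: 100,000:1

# The contrast actually visible within any single frame never changes.
for level in (1.0, 0.5, 0.01):
    white, black = frame_levels(level)
    print(f"backlight {level:.0%}: {white / black:,.0f}:1")  # 1,000:1 each time
```

The quoted figure compares two different moments in time, so it grows with the backlight’s dimming range while the contrast within any one frame stays at the panel’s native 1000:1.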

Local Dimming

In more recent times, to try and overcome some of the ongoing contrast ratio limitations of LCD displays you will often hear the term “Local Dimming” used by manufacturers. This local dimming can be used to dim the screen in “local” parts, or zones, dimming regions of the screen that should be dark, while keeping the other areas bright as they should be. This can help improve the apparent contrast ratio and bring out detail in darker scenes and shadow content. Local dimming is the foundation for producing an HDR experience on a display.
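A one-dimensional toy model helps show why this improves on global dimming. It assumes, as a hypothetical policy, that each zone’s backlight is set by the brightest pixel in that zone, and that the panel has a 1000:1 static contrast; real dimming algorithms are proprietary and more involved. Setting `zone_size` to the full width of the strip reproduces global dimming.

```python
NATIVE_CONTRAST = 1000.0  # hypothetical static contrast of the LCD panel


def zone_backlights(targets: list[float], zone_size: int) -> list[float]:
    """One backlight level per zone, set by the brightest pixel it contains,
    so highlights are never clipped (a hypothetical dimming policy)."""
    return [max(targets[i:i + zone_size]) for i in range(0, len(targets), zone_size)]


def displayed(targets: list[float], zone_size: int) -> list[float]:
    """Luminance actually shown for each pixel (relative to peak, 0..1)."""
    levels = zone_backlights(targets, zone_size)
    shown = []
    for i, target in enumerate(targets):
        backlight = levels[i // zone_size]
        # The LCD can only block light down to 1/NATIVE_CONTRAST of its backlight.
        shown.append(max(target, backlight / NATIVE_CONTRAST))
    return shown


# A dark scene with one bright object on the left (a strip of 8 pixels).
frame = [1.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
print(displayed(frame, zone_size=8))  # one zone (global dimming): blacks stay at 0.001
print(displayed(frame, zone_size=2))  # four zones: the dark zones switch off entirely
```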

Where local dimming is used, one thing you might want to be concerned about is how fast the local dimming can respond. Screens aimed at HDR gaming, for instance, need a very responsive local dimming backlight to account for rapid content changes and to ensure the dimming can keep up with the likely high frame rates and refresh rate of the display. If the local dimming is not fast enough, it can lead to obvious “blooming” and other issues. The speed of the local dimming is independent of the type of backlight used, but the more zones there are, the harder it can be for the manufacturer to make the backlight dimming response times fast and consistent enough. When you throw in variable refresh rate (VRR) technologies like NVIDIA G-Sync and AMD FreeSync, the operation of local dimming backlights may become more complex still. Manufacturers are unlikely to list the speed or responsiveness of their local dimming backlights, so you will have to rely on independent third-party testing (like ours) to examine how a local dimming backlight performs.
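To see why response speed matters, here is a toy model. It assumes, purely for illustration, that a zone updates once per refresh and that each update closes a fixed fraction `alpha` of the gap to its new target level; real backlight controllers are proprietary and their behaviour is not published. The function name, the `alpha` values and the 144Hz refresh rate are hypothetical.

```python
def frames_to_settle(alpha: float, tolerance: float = 0.1) -> int:
    """Frames a dimming zone needs to get within `tolerance` of a new target.

    Toy first-order model (an assumption, not measured behaviour): on every
    update the zone moves a fraction `alpha` of the remaining distance from
    fully off (0.0) towards fully on (1.0).
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    level, target, frames = 0.0, 1.0, 0
    while target - level > tolerance:
        level += alpha * (target - level)
        frames += 1
    return frames


REFRESH_HZ = 144  # hypothetical gaming monitor, one backlight update per frame
for alpha in (1.0, 0.5, 0.2):
    n = frames_to_settle(alpha)
    print(f"alpha={alpha}: {n} frames, about {n * 1000 / REFRESH_HZ:.0f} ms")
# alpha=1.0: 1 frames, about 7 ms
# alpha=0.5: 4 frames, about 28 ms
# alpha=0.2: 11 frames, about 76 ms
```

In this model, while a slow zone is still catching up, a bright object that has already moved on leaves a lingering glow behind it, which is one way the blooming described above can show up.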

Edge-Lit Local Dimming

Representation of edge-lit local dimming zones on the C32HG70

There are various ways that a backlight can be dimmed in more local regions. The simplest and cheapest approach is “Edge-Lit Local Dimming”. In this method all the LEDs are situated along the edge of the display, facing the centre of the screen. The edge lighting is divided into a number of zones, offering control over certain regions of the screen. The more zones the better, as this gives finer control over the content being displayed. In some cases this can offer some improvement over a display without local dimming, but often it doesn’t help at all. It can sometimes even make the image look worse if large areas of the screen are being changed at once. This can be influenced by the location of the LEDs, for instance whether they are along all 4 edges or just the top/bottom or left/right sides. This is often the only option where there are power limitations or where a thinner form factor is necessary, as in some TVs and certainly in laptops.

This method of local dimming is what has been implemented in most desktop monitors so far. It is not overly expensive or complex to introduce, and it offers a level of local dimming that allows HDR to be promoted and marketed. An 8-zone edge-lit backlight has been fairly typical in desktop monitors so far; for instance, the Samsung C32HG70 we have reviewed features a local dimming backlight in this format.

Full Array Local Dimming (FALD)

A more optimal way to deliver local dimming on an LCD screen is a “Full-Array Local Dimming (FALD)” backlight system (pictured above), where an array of individual LEDs behind the LCD panel is used to light the display, as opposed to any kind of edge-lit backlight. In the desktop monitor market edge-lighting is by far the most common method, but some screens featuring a FALD are already available.

It would be ideal for each LED to be controlled individually, but in reality with LCD screens the LEDs can only be split into separate “zones” and locally dimmed in that way. Each zone is responsible for a certain area of the screen, and objects smaller than a zone (e.g. a star in the night sky) will not benefit from the local dimming and so may look somewhat muted. The more zones there are, and the smaller they are, the better control there is over the content on the screen.

Full array local dimming backlight representation on Acer Predator X27 monitor
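The star example can be sketched for a single zone. The two backlight policies here, “max” and “mean”, are hypothetical strategies chosen only to show the trade-off, and the 1000:1 panel contrast is an assumed value.

```python
NATIVE_CONTRAST = 1000.0  # hypothetical static contrast of the LCD panel


def render_zone(pixels: list[float], policy: str) -> list[float]:
    """What a single dimming zone shows for target luminances in 0..1.

    `policy` chooses the zone's backlight level; "max" and "mean" are two
    hypothetical strategies used only to show the trade-off.
    """
    if policy == "max":
        backlight = max(pixels)
    elif policy == "mean":
        backlight = sum(pixels) / len(pixels)
    else:
        raise ValueError(f"unknown policy: {policy!r}")
    leak = backlight / NATIVE_CONTRAST  # light passing through "black" pixels
    # No pixel can be brighter than its backlight or darker than the leakage.
    return [min(backlight, max(p, leak)) for p in pixels]


# One star pixel in a zone that is otherwise 99 pixels of night sky.
zone = [1.0] + [0.0] * 99

bright = render_zone(zone, "max")
dim = render_zone(zone, "mean")
print(bright[0], bright[1])  # star at full brightness, but the sky is lifted
print(dim[0], dim[1])        # sky is near black, but the star is muted to 1%
```

Either the whole zone is lit for one small highlight, raising the surrounding black, or the zone stays dark and the highlight loses most of its brightness; smaller zones shrink both problems.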

There are some drawbacks to implementing full-array backlights. Firstly, they are far more expensive than a simple edge-lit backlight, so expect the retail prices of displays using them to be very high. For example, the first desktop HDR display with a full-array backlight system (384 zones) to be released (and which we have reviewed) was the 27″ Dell UP2718Q, which has a current retail price of around £1400 GBP. The full-array backlight can’t be blamed on its own for this high price, as this display offers other high-end and expensive features like 4K resolution, a wide gamut LED backlight, hardware calibration etc. However, you can bet that the 384-zone full-array backlight system is a large part of the production costs, and a reason for the high retail price.

FALD backlights also require more depth than an edge-lit backlight, so when they are first introduced you will see a step back from the ultra-thin profiles that have started to become the norm. Power usage and heat output are also higher with FALD backlights.

At the moment only a few monitors have been released or announced with a FALD backlight: 27″ 16:9 aspect ratio models with a 384-zone backlight, and 35″ 21:9 ultrawide models with a 512-zone backlight. We will talk about those in more detail shortly. Keep in mind that while a FALD backlight is better in theory, how it performs in practice may vary. Just because a screen has a FALD doesn’t mean it’s necessarily far better; it just has the potential to be if it’s well implemented.

Mini LED Backlights

A step beyond a FALD backlight is Mini LED. Mini LED offers much smaller chip sizes than normal LEDs and so can allow panel manufacturers to offer far more local dimming zones than even the current and planned FALD backlights we’ve seen so far. Those FALD backlights have been limited to around 384 dimming zones on a typical 27 – 32″ model. The new Mini LED backlight systems will support more than 1000 zones and also allow even higher peak brightness. They will also facilitate thinner screen profiles compared to FALD screens.
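A rough way to compare these backlights is the average screen area each zone has to cover. This sketch treats every zone as an equal share of the screen, which is a simplification (edge-lit zones are really long strips rather than small patches), uses the nominal 21:9 ratio for the ultrawide, and takes 1000 as the lower bound of “1000+” zones.

```python
import math


def screen_area(diagonal_in: float, aspect_w: float, aspect_h: float) -> float:
    """Visible screen area in square inches from its diagonal and aspect ratio."""
    scale = diagonal_in / math.hypot(aspect_w, aspect_h)
    return (aspect_w * scale) * (aspect_h * scale)


def area_per_zone(diagonal_in: float, aspect_w: float, aspect_h: float, zones: int) -> float:
    """Average screen area each dimming zone is responsible for."""
    return screen_area(diagonal_in, aspect_w, aspect_h) / zones


examples = [
    ("27in 16:9, 8-zone edge-lit", 27, 16, 9, 8),
    ("27in 16:9, 384-zone FALD", 27, 16, 9, 384),
    ("35in 21:9, 512-zone FALD", 35, 21, 9, 512),
    ("32in 16:9, 1000-zone Mini LED", 32, 16, 9, 1000),
]
for label, diag, w, h, zones in examples:
    print(f"{label}: {area_per_zone(diag, w, h, zones):.2f} sq in per zone")
# 27in 16:9, 8-zone edge-lit: 38.94 sq in per zone
# 27in 16:9, 384-zone FALD: 0.81 sq in per zone
# 35in 21:9, 512-zone FALD: 0.87 sq in per zone
# 32in 16:9, 1000-zone Mini LED: 0.44 sq in per zone
```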

Mini LED was originally expected to appear first in gaming screens for its HDR benefits, but the latest plans from AU Optronics, who are investing in Mini LED, focus more on professional grade displays. We have already seen the 32″ Asus ProArt PA32UCX professional display announced with a 1000+ zone Mini LED backlight, although its release date could still be some way off. A step beyond Mini LED is Micro LED, offering even smaller zones and therefore a higher number of them. Those are even further away and we mention them only for reference here.

Viewing HDR Content

HDR from a PC

It’s actually quite complicated to achieve an HDR output from a PC at the moment, and this is something you should be aware of before jumping straight into a modern HDR screen. You will need a compatible operating system for a start. The latest Windows 10 versions, for instance, support HDR, but on many systems you will see some odd behaviour from your monitor when it is connected. The image can look dull and washed out because the OS forces HDR on for everything. HDR content should work fine (if you can get it to play, more on that in a moment!) and provide a lovely experience with the high dynamic range and rich colours as intended. However, normal everyday use looks wrong with the HDR option turned on. Windows imposes a brightness limit of 100 cd/m2 on the screen so that bright content like a Word document or Excel file doesn’t blind you with the full 1000 cd/m2 capability of the backlight. That has a direct impact on how the eye perceives the colours, reducing how vivid and rich they would normally look. Windows also attempts to map common sRGB content to the wider gamut colour space of the screen, causing some further issues.

Sadly, Windows currently struggles to turn HDR on and off automatically when it detects HDR content, so for now you will probably need to toggle the option manually in the settings (Settings > Display > HDR and Advanced Color > Off/On). Windows does seem to behave better over HDMI, where it seems to switch correctly between SDR and HDR content, so you may have more luck with that video interface and hopefully avoid having to switch HDR on and off in the Windows settings when you want to use different content. This is not any fault of the display but a current OS software limitation, and perhaps as HDR settles a bit more we will see better OS support emerge.

Another complexity of HDR content from a PC is graphics card support. The latest NVIDIA and AMD cards support HDR output and offer the DisplayPort 1.4 or HDMI 2.0a+ outputs you need. However, you will need to purchase a top-end graphics card if you want the full HDR experience, and there are some added complexities around streaming video content and content protection which you might want to read up on further. Graphics cards that provide HDR output from a PC are available, but they are going to be expensive right now.

Finally, content support is another complex consideration from a PC. HDR movies and video including those offered by streaming services like Netflix, Amazon Prime and YouTube currently won’t work properly from a PC due to complicated protection issues. They are designed to offer HDR content via their relevant apps direct from an HDR TV where the self-contained nature of the hardware makes this easier to control. So a lot of the HDR content provided by these streaming services is difficult or impossible to view from a PC at the moment. Plugging in an external Ultra HD Blu-ray player or streaming device like Amazon Fire TV 4K with HDR support is thankfully simpler as you are removing all the complexities of software and hardware there, as the HDR feature is part of the overall device and solution.

PC HDR gaming is a little simpler, if you can find a title which supports HDR properly and have the necessary OS and graphics card requirements! There are not many HDR PC games around yet, and even those that support HDR in the console market will not always have a PC HDR equivalent. Obviously more will come in time, but it’s a little limited at the time of writing. All in all, it’s a complicated area for PC HDR at the moment.

External Blu-Ray Players and Games Consoles

Thankfully things are a bit simpler when it comes to external devices. The enclosed hardware/software system of an external Ultra HD Blu-ray player or streaming device (Amazon Fire TV 4K HDR etc.) makes this easy. They will output HDR content easily; you just need a display that is capable of showing it and has a spec capable of supporting the various requirements defined by the HDR content. More on that in a moment.

The other area to consider here is console HDR gaming. Thankfully that part of the gaming market is a bit more mature, and it’s far simpler to achieve HDR thanks to the enclosed nature of the system, with no software, graphics card or OS limitations to worry about. If you have a console that can output HDR for gaming, such as the PS4, PS4 Pro or Xbox One S, then an HDR-capable monitor will support it over an HDMI 2.0a connection.

HDR Standards and Certification – TVs

While HDR content might be created to a certain standard, the actual display may vary in its spec and support of different aspects of the image. You will often see HDR marketed with TV screens and more recently, monitors, but specs and the level of HDR support will vary from one to the next.

To stop widespread abuse of the term HDR, primarily in the TV market, along with a whole host of misleading advertising and specs, the UHD Alliance was set up. This alliance is a consortium of TV manufacturers, technology firms, and film and TV studios. Before this there were no real defined standards for HDR, and no defined specs for display manufacturers to work towards when trying to deliver HDR support to their customers. On January 4, 2016, the UHD Alliance announced its certification requirements for what it considers a “true HDR display”, with a focus at the time on the TV market since HDR had not yet started to appear in the monitor market. These requirements encapsulate the standards defined for “true” HDR support, and also define several other key areas manufacturers can work towards if they want to certify a screen overall as “Ultra HD Premium”. This Ultra HD Premium certification spec primarily focuses on two areas, contrast and colour.

Contrast / Brightness / Black Depth

There are two options manufacturers can opt for to become certified under this standard, accounting for both LCD and OLED displays. This covers the specific HDR aspect of the certification:

- Option 1) A maximum luminance (‘brightness’ spec) of 1000 cd/m2 or more, along with a black level of less than 0.05 cd/m2. This would offer a contrast ratio then of at least 20,000:1. This specification from the Ultra HD alliance is designed for LCD displays and at the moment, is the one we are concerned with here at TFT Central.

- Option 2) A maximum luminance of over 540 cd/m2 and a black level of less than 0.0005 cd/m2. This would offer a contrast ratio of at least 1,080,000:1. This specification is relevant then for OLED displays. At the moment, OLED will struggle to produce very high peak brightness, hence this differing spec. While it cannot offer the same high brightness that an LCD display might, its ability to offer much deeper black levels allows for HDR to be practical given the very high available contrast ratio.
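The two luminance options above can be expressed as a simple check. This is a sketch of the thresholds as described here, not an official test procedure; the function name and the example displays are hypothetical. Note the strict “less than” on black level, so a display measuring exactly 0.05 cd/m2 would not qualify under Option 1.

```python
from typing import Optional


def uhd_premium_hdr_option(peak: float, black: float) -> Optional[str]:
    """Which Ultra HD Premium luminance option (if any) a display meets.

    `peak` and `black` are luminance values in cd/m2.
    """
    if peak >= 1000 and black < 0.05:
        return "Option 1 (LCD)"
    if peak > 540 and black < 0.0005:
        return "Option 2 (OLED)"
    return None


# Minimum contrast implied by each option's thresholds
print(f"{1000 / 0.05:,.0f}:1")    # 20,000:1
print(f"{540 / 0.0005:,.0f}:1")   # 1,080,000:1

# Hypothetical displays: (peak cd/m2, black cd/m2)
print(uhd_premium_hdr_option(1000, 0.04))   # Option 1 (LCD)
print(uhd_premium_hdr_option(600, 0.0004))  # Option 2 (OLED)
print(uhd_premium_hdr_option(600, 0.1))     # None
```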

In addition to the HDR aspect of the certification, several other key areas were defined if a manufacturer wants to earn themselves the Ultra HD Premium certification:

- Resolution – Given the name is “Ultra HD Premium”, the display must be able to support a resolution of at least 3840 x 2160. This is often referred to as “4K”, although officially this resolution is “Ultra HD”, and “4K” is 4096 x 2160.

- Colour Depth Processing – The display must be able to receive and process a 10-bit colour signal for improved bit-depth. This offers the capability of handling a signal with over 1 billion colours. In the TV world you will often see TV sets listed with 10-bit colour or perhaps “deep colour”. This 10-bit signal processing allows for smoother gradation of shades displayed and since the TV doesn’t necessarily need to be able to display all these colours, only process the 10-bit signal, it’s not really an issue.

- Colour Gamut – As part of this certification, the UHD Alliance stipulates that the display must also offer a wider colour gamut than typical standard gamut backlights. In the TV space, this needs to go beyond the standard sRGB / Rec. 709 colour space (offering 35% of the colours the human eye can see), which can only cover around 80% of the gamut required for the certification. The display needs to support what is referred to in the TV market as the “DCI-P3” cinema standard (54% of what the human eye can see). This extended colour space allows a wider range of colours from the spectrum to be displayed and is 25% larger than sRGB (i.e. 125% sRGB coverage). In fact, it is a little beyond Adobe RGB, which is ~117% sRGB. As a side note, there is an even wider colour space called BT.2020 (~76% of what the human eye can see), which is considered an even more aggressive target for display manufacturers in the future. To date, no consumer displays can reach anywhere near even 90% of BT.2020, although many HDR content formats, including the common HDR10 format, use it as a container because it is assumed to be future proof. One to look out for in future display developments.

- Connectivity – A TV would require an HDMI 2.0 interface. This certification is designed for the TV market at the moment, but in the desktop monitor market DisplayPort is the common option, and certainly needed for the higher refresh rates (>60Hz) supported. We wouldn’t be surprised if this Ultra HD Premium certification perhaps got updated at some point to incorporate DisplayPort to allow it to be widely adopted in the monitor market as well.
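The “over 1 billion colours” figure for a 10-bit signal comes from simple arithmetic: 2 to the power of the bit depth gives the shades per red, green and blue channel, and cubing that gives the number of combinations. A short sketch, including the 8-bit case and the 12-bit depth mentioned for Dolby Vision (the function name is ours):

```python
def colours(bits_per_channel: int) -> int:
    """Distinct RGB colours a signal can carry: 2**bits shades per channel, cubed."""
    shades = 2 ** bits_per_channel
    return shades ** 3


for bits in (8, 10, 12):
    print(f"{bits}-bit: {2 ** bits:,} shades per channel, {colours(bits):,} colours")
# 8-bit: 256 shades per channel, 16,777,216 colours
# 10-bit: 1,024 shades per channel, 1,073,741,824 colours
# 12-bit: 4,096 shades per channel, 68,719,476,736 colours
```

The four-fold increase in shades per channel from 8-bit to 10-bit is what allows the smoother gradation described above.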

Displays which officially meet these defined standards can then carry the ‘Ultra HD Premium’ logo, which was created specifically for this purpose. Be mindful that not all displays will feature this logo, but may still advertise themselves as supporting HDR. HDR is only one part of this certification, so a screen can support HDR in some capacity without offering the other additional specs (e.g. it may lack the wider gamut support). Where a screen is advertised with HDR but does not carry the Ultra HD Premium logo, it may be unclear how the HDR is being implemented and whether it can truly live up to the threshold specs the UHD Alliance came up with. In those situations you may get some of the benefits of HDR content, but not the full experience intended and defined here. That’s where it may get confusing, but rest assured that if a display carries this certification and logo, it has “fully fledged HDR” as part of its spec, at least in terms of how the UHD Alliance believes it should be.

The Ultra HD Premium certification has been used in the TV market for a while now, and some desktop monitors have also used the same certification for their HDR performance. There are other more common standards in the desktop monitor market which were created by VESA, which we will talk about later.

Monitor HDR – Is there a “true” HDR?

It’s all very well having a defined standard under the Ultra HD Premium branding, but is that really the be all and end all of what a “true” HDR display should be? In the TV market, it has been quite well established as the requirement for so-called true HDR support. However if you take a step back a moment, we would argue that the way the HDR is achieved via the local dimming backlight option is just as important here. For instance you could have a display that meets all the specs of the Ultra HD Premium certification, but has a limited number of dimming zones through an edge-lit backlight system. That might technically meet the specs on paper, but the actual HDR experience in real life might be poor. On the other hand you might have a display with a very well implemented Full-array local dimming backlight (FALD) which doesn’t quite meet the Ultra HD Premium specs – perhaps it lacks the full Ultra HD resolution for instance as it’s a smaller display size. The FALD offers better local dimming control and the resulting HDR experience may be far superior to the display which conforms to the certification specs, but has a poor edge-lit local dimming system. That second display wouldn’t be classified as a “true” HDR display, despite being better in real practical use. The way the local dimming is achieved is important.

Things are a little more stable in the TV market now so ideally you would have a screen that has a decent backlight operation and carries the Ultra HD Premium certification. Just look out for differences in spec and how things are achieved and don’t rule something out just because on paper it doesn’t meet a defined standard.

But does this translate to the desktop monitor market? It’s more complicated here as well. For a start for the majority of desktop monitors we don’t feel that the Ultra HD 3840 x 2160 resolution is a necessity. On a large format TV it would be far more important but on a common 24 – 27″ sized desktop monitor you don’t need that kind of resolution. The image is perfectly sharp and crisp without it, and the screens can still handle content rendered in higher res (like an Ultra HD Blu-ray for instance) and scale it down without any noticeable quality loss, certainly if you move a little further away from the screen for a normal multimedia viewing distance. So this creates an issue with the Ultra HD Premium certification already.
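Pixel density gives one rough way to see this. The sketch below computes pixels per inch for a few example screen sizes (our own choice of examples); it ignores viewing distance, which matters just as much, since a TV is normally watched from much further away.

```python
import math


def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch along the diagonal."""
    return math.hypot(width_px, height_px) / diagonal_in


screens = [
    ('27" 2560x1440 monitor', 2560, 1440, 27),
    ('27" 3840x2160 monitor', 3840, 2160, 27),
    ('65" 1920x1080 TV', 1920, 1080, 65),
    ('65" 3840x2160 TV', 3840, 2160, 65),
]
for label, w, h, diag in screens:
    print(f"{label}: {ppi(w, h, diag):.0f} PPI")
# 27" 2560x1440 monitor: 109 PPI
# 27" 3840x2160 monitor: 163 PPI
# 65" 1920x1080 TV: 34 PPI
# 65" 3840x2160 TV: 68 PPI
```

A 27″ monitor at 2560 x 1440 is already denser than a 65″ Ultra HD TV, while dropping a large TV to 1920 x 1080 halves its density, which is why Ultra HD matters far more on big screens.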

Peak luminance is another area for debate. The Ultra HD Premium certification dictates a 1000 cd/m2 peak luminance support. That’s fine from a TV which you might view from several metres away, but what about from a desktop monitor where you are 1 – 2 feet away from the screen? The 1000 cd/m2 luminance is needed to meet the way content is being produced and provide maximum detail in brighter scenes, but actually from that close range might be hard on the eyes. There is an argument that maybe max luminance should be lower on desktop monitors, and although you might lose some detail in highlights and brighter content (but it still being far better than an SDR image), you avoid complications with it being uncomfortably bright from up close. We’re not saying one is right or wrong, it’s just a possible area for debate.

The Ultra HD Premium spec also has no consideration for typical PC DisplayPort interfaces at the moment. While a screen can carry the necessary HDMI 2.0a+ connection, which is useful for external devices, the certification probably needs to account for DisplayPort for PC connectivity. In theory you could have a monitor designed for PC use only, without any HDMI port, and while it has the necessary DP 1.4 for PC HDR compatibility, it would not be able to conform to the Ultra HD Premium certification, which at the moment demands a compatible HDMI connection.

Meaningful HDR on desktop monitors – our suggested requirements

There needs to be some kind of comparable HDR certification scheme in the desktop monitor market (and VESA have had a stab at that, as we will discuss in a moment), taking into account some of these considerations and perhaps helping to avoid the black and white classification some people make when it comes to HDR. We don’t think the “it doesn’t support Ultra HD Premium so it’s not ‘true’ HDR” argument is as clear cut here.

In our view right now, the ability of a desktop monitor to support HDR is probably ranked in the following order of importance:

| Priority | Requirement | Notes |
|---|---|---|
| 1 | Local dimming of some kind is needed | It must have some kind of local dimming for a start, otherwise we would not consider it an HDR display. Global dimming (i.e. dynamic contrast ratio) doesn’t count! |
| 2 | How the local dimming is achieved | A FALD backlight with many zones is preferred; the more zones the better. Mini LED and future Micro LED backlights may help even more. The local dimming must also be fast and responsive to keep up with content changes, especially on a gaming display. |
| 3 | Active contrast ratio | The whole point here is to achieve a higher active HDR contrast ratio; 20,000:1 or more feels a sensible aspiration, as per the TV market. |
| 4 | Extended colour gamut support | This feels like an easy one: the colour space should be wider than normal sRGB, ideally >90% of the DCI-P3 reference. The boost in colour space makes a difference to the image appearance and, after the improved contrast ratio, is a key part of the overall HDR experience. |
| 5 | Peak luminance | The full 1000 cd/m2 might not be necessary or ideal, but we still need a luminance beyond the typical 300 – 350 cd/m2 of SDR screens to offer improvements. 550 – 600 cd/m2 seems to be a common peak luminance from current panel manufacturers. |
| 6 | Colour depth | 10-bit colour depth is preferred for handling HDR content, although in our opinion it isn’t as important as the improved contrast ratio and wider gamut. Displays with an 8-bit+FRC colour depth would also be fine. |
| 7 | Video connections | To support HDR you will need HDMI 2.0a+ or DisplayPort 1.4 anyway, but we would like to see DP considered when it comes to certifying displays. |
| 8 | Resolution | Ultra HD isn’t as important on smaller desktop screens but would still be useful on larger ones. |
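To make the priority list above a little more concrete, here is a minimal Python sketch that checks a spec sheet against it. The `MonitorSpec` fields, the `hdr_checklist` and `is_meaningful_hdr` functions and the exact thresholds are our own hypothetical illustration of the table (we read “>90% DCI-P3” and “beyond 300 – 350 cd/m2” literally); they are not part of any certification scheme.

```python
from dataclasses import dataclass


@dataclass
class MonitorSpec:
    # Hypothetical spec-sheet fields, named for illustration only.
    dimming: str           # "none", "global", "edge" or "fald"
    hdr_contrast: float    # active HDR contrast, e.g. 20000 for 20,000:1
    dci_p3_pct: float      # DCI-P3 coverage in percent
    peak_nits: float       # peak luminance in cd/m2
    ten_bit_or_frc: bool   # native 10-bit or 8-bit+FRC
    has_dp14: bool         # DisplayPort 1.4 input


def hdr_checklist(spec: MonitorSpec) -> dict:
    """Pass/fail per item of the priority table (thresholds are our reading of it)."""
    return {
        # Priority 1: global dimming (DCR) does not count as local dimming.
        "local_dimming": spec.dimming in ("edge", "fald"),
        # Priority 2: FALD preferred over edge-lit dimming.
        "fald": spec.dimming == "fald",
        "contrast": spec.hdr_contrast >= 20_000,
        "wide_gamut": spec.dci_p3_pct > 90,
        # Priority 5: meaningfully above the 300-350 cd/m2 typical of SDR screens.
        "peak_luminance": spec.peak_nits > 350,
        "colour_depth": spec.ten_bit_or_frc,
        "displayport": spec.has_dp14,
    }


def is_meaningful_hdr(spec: MonitorSpec) -> bool:
    # Without local dimming we would not consider it an HDR display at all.
    return hdr_checklist(spec)["local_dimming"]


# A hypothetical "HDR input support" screen: no local dimming, 300 cd/m2, 85% DCI-P3.
basic = MonitorSpec("none", 2500, 85, 300, False, True)
print(is_meaningful_hdr(basic))  # False
print(hdr_checklist(basic))
```

The order matters more than the number of passes: a screen that ticks every other box but has no local dimming still fails the first, most important test.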

HDR in the Desktop Monitor Market

We’ve already started to see the term HDR used for desktop monitors, and it is becoming more prevalent in press releases for forthcoming displays. There is still a mix of specs from the monitor manufacturers in the rush to promote their screens as supporting HDR, the new buzz word in this market. A lot of screens are marketed for HDR but actually offer very little benefit in real life, lacking most or all of the features and specs necessary for a decent, true HDR experience.

“HDR input support” or “HDR10 support”

This is one of the most common marketing ploys used by display manufacturers, and reminds us a little of the early days of “HD ready” TVs, which could accept an HD signal but lacked the resolution to really display it properly! Saying a screen can “support an HDR input” or accept an “HDR10 source” is largely meaningless and, we feel, quite misleading for consumers. Being able to accept an HDR input signal over DisplayPort or HDMI is all very well, but the screen needs to be able to produce a proper HDR output as well. We have seen many displays released and marketed for HDR that feature no real local dimming, lack the necessary colour gamut and colour depth, cannot produce a higher peak brightness, and overall do nothing to actually produce an HDR image.

For instance, there is the BenQ EX3501R display (pictured above), marketed almost entirely on its supposed HDR performance. While it can accept an HDR input signal, in reality there is no local dimming, no extension to the contrast ratio beyond the panel’s native figure, no extended peak brightness (300 cd/m2 maximum) and no 10-bit colour depth support. It does partly meet the requirement for a wider colour space, with an extended colour gamut covering about 85% of DCI-P3, although oddly BenQ don’t even promote this spec; we only uncovered it through our independent testing. The point here is that the screen is marketed heavily as HDR but lacks nearly all of the features and specs which would produce an HDR image and experience.

Equally misleading is the use of the term HDR for models like the Dell S2718D monitor. Dell’s press release for this screen says at the bottom: “Dell’s HDR feature has been designed with a PC user in mind and supports specifications that are different from existing TV standards for HDR. Please review the specs carefully for further details.” So at least they seem to be open about it perhaps not being the “full HDR” experience people are expecting or wanting. This screen offers only a 2560 x 1440 resolution, 400 cd/m2 brightness and a normal 99% sRGB / Rec. 709 colour space. There’s no mention of local dimming or anything similar, so it is anyone’s guess what they are offering here for so-called HDR support. Certainly none of the specs come anywhere near the longer-standing TV standards for HDR, which you would hope manufacturers would at least aim for. Again, accepting an HDR input doesn’t mean much if the screen can’t then display it properly!

How to spot a screen with a meaningful HDR output

We’ve already listed in the previous section the features and specs we consider important in creating a meaningful HDR experience in the desktop monitor market. With a bit of luck you will see manufacturers specifically mention local dimming if it is used, certainly if they have added FALD, in which case they will normally list how many dimming zones are used too. Look out for specs talking about extended peak brightness as well, as that’s often a good indication that some kind of local dimming is used, provided the peak is quite a lot higher than the standard brightness of the screen.

Colour specs are more routinely included, so look out for colour gamut specs with high DCI-P3 coverage, or something which is more than 115 – 120% sRGB coverage if that is the spec they have stuck to. You should be able to see if there is the relevant colour depth support too, as it’s common for manufacturers to list either 8-bit (sometimes 16.7m colours) or 10-bit (1.07b colours) in their specs. Resolution and video connections will also be included as standard.
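The colour counts quoted on spec sheets follow directly from the bit depth per channel: three channels (red, green and blue), each with 2^bits levels. A quick Python check of that arithmetic (the `colour_count` function name is ours):

```python
def colour_count(bits_per_channel: int) -> int:
    """Number of displayable colours: 2**bits levels for each of R, G and B."""
    return (2 ** bits_per_channel) ** 3


for bits in (8, 10):
    # Thousands separators make the "16.7m" and "1.07b" figures easy to spot.
    print(bits, f"{colour_count(bits):,}")
```

This prints 16,777,216 for 8-bit and 1,073,741,824 for 10-bit. An 8-bit+FRC panel approximates the extra levels by rapidly alternating between adjacent 8-bit shades, so spec sheets may quote the 1.07b figure for those screens too.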

Some standards have also been introduced by VESA to help classify HDR in the desktop monitor market, which we will talk about in a moment. If a screen talks about its HDR capabilities but doesn’t carry one of the VESA DisplayHDR certifications, hopefully the above guidance will help you identify the screen’s potential HDR capabilities.

NVIDIA G-Sync HDR

In January 2017 NVIDIA announced their new generation of G-sync. G-sync is a technology offering variable refresh rate support for compatible graphics cards and displays, helping to improve gaming performance and avoid issues like tearing and stuttering when frame rates in games vary. It requires a G-sync module (a hardware chip) to be added to the monitor. The new generation of the module, commonly referred to as the v2 module, focuses on providing support for HDR and is referred to as “G-sync HDR”. It also includes the latest video interfaces, DisplayPort 1.4 and HDMI 2.0, bringing the higher bandwidth needed for the most modern high refresh rate / high resolution displays. NVIDIA have their own certification for screens which feature this v2 module and meet their HDR requirements, so you will see the “NVIDIA G-sync HDR” branding and logo used. This may also be combined with other certifications if the display has also met other standards like Ultra HD Premium or one of the VESA DisplayHDR levels.

NVIDIA have produced this technology in partnership with panel manufacturer AU Optronics. Unlike HDR-compatible TVs, G-sync HDR monitors have been designed from the ground up, combining the benefits of G-sync with support for HDR content and therefore avoiding most of the input lag typically seen from a TV. Furthermore, and perhaps most importantly for HDR, it seems that the new G-sync HDR screens will incorporate a full array local dimming (FALD) backlight for maximum local dimming performance and an optimal HDR experience. At least, those talked about so far do.

NVIDIA G-sync HDR displays will offer a colour gamut very close to the DCI-P3 reference colour space. They will provide the necessary wider gamut (~125% sRGB) by making use of the recently developed Quantum Dot technology. Quantum Dot Enhancement Film (QDEF) can be applied to the screen to create deeper, more saturated colours. First used on high-end televisions, QDEF is coated with nano-sized dots that emit light of a very specific colour depending on the size of the dot, producing bright, saturated and vibrant colours across the whole spectrum, from deep greens and reds to intense blues. It’s a more economical, modern way of offering an extended colour gamut beyond the typical sRGB space without the need for an entirely different (and more expensive) wide gamut LED backlight.

As such, you will see Quantum Dot technology used on a wide range of screens in all sectors, not just in the professional space where wide gamut backlights are sometimes used. Mainstream, multimedia and gaming screens can make use of Quantum Dot if the manufacturers choose, and it is independent of panel technology and backlight type. It can be added to a normal W-LED backlight to boost the gamut and colours, or to a screen with a full-array backlight like those being developed in the G-sync HDR range discussed here.

As a side note, having Quantum Dot does not necessarily mean a screen supports HDR. There are already many Quantum Dot displays which offer no HDR support, and certainly no full-array backlight; they use Quantum Dot simply to improve the colour gamut and offer more vivid, saturated colours, often desirable for gaming and multimedia. For HDR displays, Quantum Dot is just a common method of bringing about the colour gamut improvements needed to meet the Ultra HD Premium standard.
So as well as the support for HDR through the full-array backlight system with local dimming, NVIDIA are combining it with Quantum Dot technology to bring about a wider colour space.
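Percentages like “~125% sRGB” compare gamut sizes rather than coverage, and the figure depends on which chromaticity diagram the triangles are measured in. As a sketch, assuming the comparison is by triangle area using the standard sRGB and DCI-P3 primaries, the Python below computes DCI-P3’s area relative to sRGB in both CIE 1931 xy and CIE 1976 u’v’. On our arithmetic it comes out at roughly 126% in u’v’ and roughly 136% in xy, so the ~125% figure above is consistent with a u’v’ comparison; manufacturers don’t always say which they use.

```python
# Standard CIE 1931 xy chromaticities of the red, green and blue primaries.
SRGB = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
DCI_P3 = [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)]


def xy_to_uv(x: float, y: float) -> tuple:
    """Convert CIE 1931 xy to CIE 1976 u'v'."""
    d = -2 * x + 12 * y + 3
    return 4 * x / d, 9 * y / d


def triangle_area(pts) -> float:
    """Shoelace formula for a triangle given three (x, y) points."""
    (x1, y1), (x2, y2), (x3, y3) = pts
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


def relative_area(gamut, reference, use_uv: bool = True) -> float:
    """Area of `gamut` divided by area of `reference` (1.0 means equal size)."""
    def project(p):
        return xy_to_uv(*p) if use_uv else p

    return triangle_area([project(p) for p in gamut]) / triangle_area(
        [project(p) for p in reference]
    )


print(f"DCI-P3 / sRGB in u'v': {relative_area(DCI_P3, SRGB):.0%}")
print(f"DCI-P3 / sRGB in xy:   {relative_area(DCI_P3, SRGB, use_uv=False):.0%}")
```

Coverage figures such as “85% of DCI-P3” are a different measure: they describe how much of the reference triangle the panel’s own triangle overlaps, which needs a polygon intersection rather than a simple area ratio.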

There have been several displays released already in this new “G-sync HDR” range. First came the Asus ROG Swift PG27UQ, which we have reviewed. This model uses a 384-zone FALD backlight and offers a 3840 x 2160 Ultra HD resolution, 1000 cd/m2 peak brightness and 125% sRGB colour space amongst other impressive specs, including a 144Hz native refresh rate (the first time on an Ultra HD resolution panel). There are also competing equivalent models from Acer, with their Predator X27, and from AOC with their AGON AG273UG. These are all 27″ models, and it is great to see the use of a FALD backlight here to try and deliver a more optimal HDR experience.

There are also a couple of larger format screens announced and expected during H1 of 2019 with a 35″ ultrawide format and featuring a 512-zone FALD backlight. These displays will offer a 3440 x 1440 resolution (so, technically just under the Ultra HD 3840 x 2160 requirements), but will have a 1000 cd/m2 peak brightness and 90% DCI-P3 colour space. Models announced so far are the Acer Predator X35 and Asus ROG Swift PG35VQ.
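To give a feel for what a zone count means, the sketch below assumes each zone is simply driven to the brightest pixel it contains. That is only one basic policy; real FALD controllers use their own, undisclosed algorithms, and the `zone_backlight` and `pixels_per_zone` functions are ours. It also works out how many pixels share each zone on the 384-zone Ultra HD models and the 512-zone 3440 x 1440 ultrawides described above, assuming the zones are spread evenly.

```python
def zone_backlight(frame, zones_x: int, zones_y: int):
    """Per-zone backlight level (0..1) for a frame of target luminances (0..1).

    Each zone is set to the brightest pixel inside it: highlights are not
    clipped, but dark pixels sharing a zone with a highlight are lit too,
    which is where blooming comes from. Assumes the frame has at least as
    many rows and columns as there are zones.
    """
    h, w = len(frame), len(frame[0])
    levels = []
    for zy in range(zones_y):
        y0, y1 = zy * h // zones_y, (zy + 1) * h // zones_y
        row = []
        for zx in range(zones_x):
            x0, x1 = zx * w // zones_x, (zx + 1) * w // zones_x
            row.append(max(frame[y][x] for y in range(y0, y1) for x in range(x0, x1)))
        levels.append(row)
    return levels


def pixels_per_zone(width: int, height: int, zones: int) -> float:
    """Average number of pixels sharing one dimming zone."""
    return width * height / zones


# A dark 8 x 8 frame with one bright pixel, on a hypothetical 4 x 4 zone grid.
frame = [[0.0] * 8 for _ in range(8)]
frame[1][6] = 1.0
for row in zone_backlight(frame, 4, 4):
    print(row)

print(pixels_per_zone(3840, 2160, 384))  # 21600.0
print(pixels_per_zone(3440, 1440, 512))  # 9675.0
```

Only one of the 16 zones lights up, but it lights its whole 2 x 2 block of pixels, not just the highlight. With tens of thousands of pixels per zone on a real panel, that halo is exactly what more zones (or per-pixel OLED dimming) help to reduce.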

AMD’s FreeSync 2

AMD have also introduced the latest development of their FreeSync variable refresh rate technology, which has been around since 2015. FreeSync 2, as it’s called, is not just about variable refresh rate but accounts for High Dynamic Range (HDR) as well. It’s not designed to replace FreeSync, but is more of a side development focusing on what AMD, its monitor partners and its game development partners can do to improve high-end gaming.

Central to this development is HDR support. As Brandon Chester over at Anandtech has discussed more than once, support for next-generation display technologies under Windows is mixed at best. HiDPI doesn’t work quite as well as anyone would like, and there isn’t a comprehensive, consistent colour management solution for monitors that offer HDR and/or colour spaces wider than sRGB. Recent Windows 10 updates have helped a bit, but they don’t fix all the issues and obviously don’t help gamers still on older operating systems. Windows simply doesn’t have a good internal HDR display pipeline, which makes it hard to use HDR under Windows. The other issue is that HDR monitors can introduce additional input lag because of the internal processing they require.

FreeSync 2 attempts to solve this problem by changing the whole display pipeline, working around the issues with Windows and offloading as much work from the monitor as possible. FreeSync 2 is essentially an AMD-optimized display pipeline for HDR and wide colour gamuts, making HDR easier to use and better performing, and reducing the latency and input lag added by HDR processing. There’s a detailed article over at Anandtech covering the development requirements and further technical detail, which is well worth a read.
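The core idea, as we understand it, is that the game tone maps its content once, straight to the monitor’s own capabilities, rather than sending a generic HDR signal that the monitor then has to re-map with its own processing. The sketch below illustrates that idea only; it is not AMD’s API or algorithm. We use the well-known extended Reinhard curve as a stand-in, and assume the game knows the display’s peak luminance (`display_peak_nits`) and the content’s brightest value (`content_max_nits`); the function name is hypothetical.

```python
def tone_map_to_display(scene_nits: float, content_max_nits: float,
                        display_peak_nits: float) -> float:
    """Map scene luminance onto the panel's range with an extended Reinhard curve.

    Stand-in curve for illustration, not AMD's. Luminance is expressed in units
    of the display peak, so content_max_nits lands exactly on display_peak_nits.
    """
    if content_max_nits <= display_peak_nits:
        return scene_nits  # content already fits the panel; no compression needed
    l = scene_nits / display_peak_nits
    l_white = content_max_nits / display_peak_nits
    mapped = l * (1 + l / l_white ** 2) / (1 + l)
    # Anything brighter than content_max_nits is clipped to the display peak.
    return min(mapped, 1.0) * display_peak_nits


# Content mastered to 4000 cd/m2 shown on a hypothetical 600 cd/m2 monitor.
for nits in (100, 1000, 4000):
    print(nits, round(tone_map_to_display(nits, 4000, 600), 1))
```

The brightest content lands exactly on the panel’s peak and every level is mapped once, in one place, which is the property that avoids a second round of tone mapping (and its latency) inside the monitor.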

Because all of AMD’s FreeSync 1-capable cards (i.e. GCN 1.1 and later) already support both HDR and variable refresh, every GPU that supports FreeSync 1 will also be able to support FreeSync 2; all it takes is a driver update. We have now seen plenty of FreeSync 2 monitors released. Look out for models where HDR is talked about.

AMD’s approach to what makes an HDR display seems to be a bit more variable than NVIDIA’s. NVIDIA’s “G-sync HDR” certification is based on high end specs and features, conforming to high levels of HDR performance. FreeSync 2 displays can have varying levels of actual HDR performance, so just because a screen is marketed as FreeSync 2, or has FreeSync + HDR doesn’t mean it will necessarily offer anything meaningful in the way of HDR output. It’s back to trying to spot a decent HDR implementation from the specs and product pages, or perhaps relying on one of the VESA DisplayHDR certifications talked about in the next section.

VESA DisplayHDR Standards

The TV market has the Ultra HD Premium certification to provide a defined spec standard for HDR TVs. In the desktop monitor market, VESA introduced their own “DisplayHDR” certification system towards the end of 2017. It was created with input from more than two dozen participants including AMD, NVIDIA, Samsung, Asus, AU Optronics, LG Display, Dell, HP and LG, and is described as the “display industry’s first fully open standard specifying HDR quality, including luminance, colour gamut, bit depth and rise time”.

The first release, DisplayHDR version 1.0, focused on LCD displays, leaving room to define OLED and other technologies later when required. It was updated in January 2019 with certifications for possible forthcoming OLED monitors, as well as a new middle tier aimed at HDR laptops with LCD displays.

They have the following levels of certification under the DisplayHDR branding so far for LCD displays, designed to meet the requirements of low, medium and high end desktop monitors. These are the ones you will see most in the desktop monitor market and of most interest to us right now:

| Level | What VESA say about the certification | What we say |
|---|---|---|
| DisplayHDR 400 | “First genuine entry point for HDR”. Significant step up from SDR baseline: true 8-bit image quality – on par with top 15% of PC displays today; global dimming – improves dynamic contrast ratio; peak luminance of 400 cd/m2 – up to 50% higher than typical SDR; minimum requirements for colour gamut and contrast exceed SDR | We don’t like this level. It doesn’t offer any real HDR experience as it requires no local dimming. Global dimming is not the same thing, and most people don’t even like that technology or DCRs. A maximum brightness of 400 cd/m2 is also hardly better than most normal monitors, and it doesn’t require a wider colour gamut than typical sRGB screens. In our opinion this certification leads to a lot of abuse in the monitor market and a whole host of “fake HDR” displays, misleading consumers who aren’t aware of the details. |
| DisplayHDR 500 | True local dimming and high-contrast HDR at the lowest price point and thermal impact: peak luminance of 500 cd/m2 – optimized for better thermal control in super-thin notebook displays; same colour gamut, black level and bit-depth requirements associated with DisplayHDR 600 and DisplayHDR 1000 levels; includes local dimming; requires 10-bit image processing | By the time you get up to the HDR 500 and 600 levels, things are a lot better. There is now a requirement for local dimming, which is the foundation for creating an HDR experience. Edge-lit local dimming can be used to reach these certification levels, so where you see HDR 500 / 600 that is probably a good indication that edge-lit local dimming is used. An increased peak brightness of either 500 cd/m2 or 600 cd/m2 offers some nice improvements over normal monitor backlight brightness and can help with highlights in HDR content. There is also now a requirement for a wider colour gamut, with 90%+ DCI-P3. These certification levels can be used on a screen where a meaningful HDR experience is offered. They might not reach 1000 cd/m2+ peak brightness, and probably don’t have a FALD or lots of dimming zones, but they do offer a nice middle ground. |
| DisplayHDR 600 | “Targets professional/enthusiast-level laptops and high-performance monitors”. True high-contrast HDR with notable specular highlights: peak luminance of 600 cd/m2 – double that of typical displays; full-screen flash requirement renders realistic effects in gaming and movies; real-time contrast ratios with local dimming – yields impressive highlights and deep blacks; visible increase in colour gamut compared to already improved DisplayHDR 400; requires 10-bit image processing | As for DisplayHDR 500 above. |
| DisplayHDR 1000 | “Targets professional/enthusiast/content-creator PC monitors”. Outstanding local-dimming, high-contrast HDR with advanced specular highlights: peak luminance of 1000 cd/m2 – more than 3x that of typical displays; full-screen flash requirement delivers ultra-realistic effects in gaming and movies; unprecedented long duration, high performance ideal for content creation; local dimming yields 2x contrast increase over DisplayHDR 600; significantly visible increase in colour gamut compared to DisplayHDR 400; requires 10-bit image processing | This top certification is very similar to the 500/600 levels, but now requires peak brightness support of 1000 cd/m2+. This is possible using local dimming, but many screens with the HDR 1000 certification use FALD backlights to reach those levels. Again there is a requirement for a >90% DCI-P3 colour space too. |

They then also have the following levels of certification under the DisplayHDR branding for OLED displays:

| Level | What VESA say about the certification | What we say |
|---|---|---|
| DisplayHDR True Black 400 / True Black 500 | “Incredibly accurate shadow detail for a remarkable visual experience.” Deeper black levels and dramatic increases in dynamic range create a remarkable visual experience: peak luminance of 400 cd/m2 or 500 cd/m2; brings permissible black level down to 0.0005 cd/m2 – the lowest level that can be effectively measured with industry-standard colorimeters; provides up to 50X greater dynamic range and 4X improvement in rise time compared to DisplayHDR 1000 | We have yet to see these used in the desktop monitor market due to the lack of OLED screens, but they seem sensible levels to work with. OLEDs generally cannot reach the high peak brightness that an LCD display can, which is why those specs are limited to 400 or 500 cd/m2. However, an OLED can reach much deeper blacks, so the actual HDR contrast will be much higher. OLEDs can also be dimmed at an individual pixel level, so you get an excellent local dimming experience without being restricted to a limited number of zones, as even a FALD backlight is. OLED dimming surpasses what is possible from current LCD displays with FALD and other local dimming options, and is also much faster. |

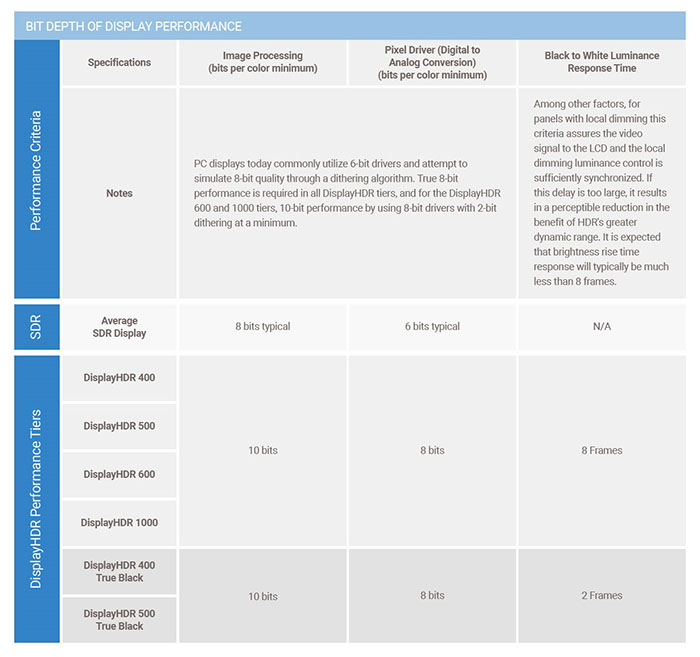

The performance criteria of all the current VESA DisplayHDR certifications are also explained from their website in the following tables:

Why We Dislike the DisplayHDR 400 Certification

While this is a sensible idea to provide some uniformity for HDR in the desktop monitor market, we do have a few concerns. Our main concern lies at the entry-level end, with what we consider a very weak classification that is likely to encourage shoddy, misleading marketing from display manufacturers. Maybe it was they who pushed VESA for this entry level, allowing them to market their screens as HDR certified and jump on the bandwagon of the hot topic right now? We have already seen a wide range of screens marketed as “DisplayHDR 400” certified, and there will be many more, suggesting to the consumer that they support HDR content and performance. The ill-informed will likely take this at face value, when in reality we don’t feel that the HDR 400 classification offers anything in the way of meaningful HDR performance and capability. In fact, we don’t feel it delivers much beyond what most displays already on the market could achieve, even before the advent of HDR. We will explain.

If you look at the low-end DisplayHDR 400 standard, the requirement for a true 8-bit display is useful, but in the 27″ and above market IPS and VA panels already meet this requirement anyway, and many TN Film panels used in that size range are also true 8-bit. To try and create an improved contrast ratio, the standard says the screen need only support Global Dimming. This dims and brightens the whole backlight in one go depending on the screen content, and is just a different name for the old ‘Dynamic Contrast Ratio’ (DCR) technology. Yes, that can produce higher dynamic contrast ratios in practice, but DCR has been around for many years and has largely fallen out of favour. Many people don’t like it, and it offers no real HDR benefit beyond what is already possible with DCR backlight systems. It is the local dimming ability of HDR displays which should separate them from standard screens, producing the HDR image through more localised, finer control over the backlight in smaller zones. To be quite honest, without local dimming of any sort we don’t feel a screen should be marketed as HDR. The peak luminance requirement is then only 400 cd/m2, which in some cases was already available from older pre-HDR displays. Even though a lot of screens are typically 300 – 350 cd/m2 nowadays, the small boost to 400 cd/m2 doesn’t make much difference, and it’s certainly nowhere near the higher peak luminance that HDR10 and Dolby Vision (and other) content is mastered to. The spec table also says the contrast ratio for these screens needs to be “at least 955:1”, hardly an achievement for most modern panels, although the “tunnel” spec actually suggests the contrast ratio needs to be at least 4000:1.
Finally there is colour gamut: DisplayHDR 400 only requires 95% of the ITU-R BT.709 colour space, which is basically 95% sRGB and again the norm on nearly every display around now.

You can see why we have concerns about this entry-level DisplayHDR 400 standard, as it could encourage widespread abuse of the HDR certification for displays which offer little to no real improvement over current models in the market. We would like to suggest to VESA, and to the display manufacturers developing these schemes, that the HDR 400 certification should be revamped or perhaps even scrapped. We feel it’s misleading to consumers and is one of those specs which really needs to disappear. The certifications were surely designed in the first place to bring uniformity to the market and help consumers make good decisions when they want an HDR-capable display. This bottom tier goes against that.

The Other Levels Are Better

Thankfully the DisplayHDR 500, 600 and 1000 certifications are better, getting into the realms of what we would consider good, meaningful HDR. DisplayHDR 600 requires a 600 cd/m2 peak luminance, which is a decent step up from common displays and can improve highlights in HDR content. It also pushes the colour depth requirement to 8-bit+FRC (10-bit support), raises the HDR contrast ratio requirement to at least 6000:1 and, importantly, requires a local dimming implementation. Even if this is only edge-lit local dimming, that’s still an improvement over the “Global Dimming” of the HDR 400 level. The colour gamut requirement is also boosted to 90%+ DCI-P3 coverage, bringing it more in line with the standards used in the TV market and producing the colour boost that HDR is associated with. Models like the recently tested Samsung C32HG70 fall nicely into this middle category.

Then at the top end, the DisplayHDR 1000 certification pushes things further and aligns closely with the Ultra HD Premium standard used in the TV market. A 1000 cd/m2 peak luminance is now needed, along with a >20,000:1 HDR contrast ratio, 10-bit colour depth support (at least 8-bit+FRC) and 90%+ DCI-P3 colour space coverage, again all requiring local dimming. We expect that most models that can reach this kind of peak luminance will also need a Full Array Local Dimming (FALD) backlight, which adds another level of improvement, although it’s not a specific requirement of the certification scheme.
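Putting the headline numbers quoted in this section side by side, a rough Python sketch of which DisplayHDR tier a spec sheet could at best be aiming for might look like the following. It is a simplification of our own: `rough_displayhdr_tier` is hypothetical, and it only looks at peak luminance, local dimming and colour gamut, ignoring the contrast, black level, bit depth and rise time tests that VESA’s actual specification and test tool apply. It does illustrate the point made above, though: without local dimming, even a very bright screen can only reach the 400 level.

```python
# (tier, min peak cd/m2, local dimming required, min % DCI-P3, min % BT.709)
# Figures as quoted in this article; highest tier first.
TIERS = [
    ("DisplayHDR 1000", 1000, True, 90, 0),
    ("DisplayHDR 600", 600, True, 90, 0),
    ("DisplayHDR 500", 500, True, 90, 0),
    ("DisplayHDR 400", 400, False, 0, 95),
]


def rough_displayhdr_tier(peak_nits, local_dimming, dci_p3_pct, bt709_pct):
    """Highest tier whose headline numbers the spec meets, or None.

    Hypothetical simplification: contrast, black level, bit depth and
    rise time requirements are deliberately not checked.
    """
    for tier, min_peak, needs_ld, min_p3, min_709 in TIERS:
        if (peak_nits >= min_peak
                and (local_dimming or not needs_ld)
                and dci_p3_pct >= min_p3
                and bt709_pct >= min_709):
            return tier
    return None


print(rough_displayhdr_tier(1000, True, 95, 99))   # DisplayHDR 1000
print(rough_displayhdr_tier(1000, False, 95, 99))  # DisplayHDR 400
```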

As another interesting note, the 600 and 1000 certifications also define a “black to white luminance response time”. This is not related to pixel response times in the traditional sense, but defines how fast the backlight should react when going from a black image to a white image, i.e. how long it takes to go from the minimum luminance of a dark HDR scene to the peak luminance of a white patch when it appears. This helps ensure there is no annoying lag, with the image being dimmed and brightened at the wrong time or a lot of blooming behind moving objects. Interestingly, they have defined this in exactly the same way as we introduced in our tests of the Dell UP2718Q, the first screen we tested with a FALD backlight, measuring the rise time using a 10% threshold from the dark screen to the peak luminance. VESA have stated that for the 600 and 1000 certifications the rise time should be 8 frames or less, although they expect it to be much lower in most cases. 8 frames on a 60Hz display is around 133.33ms, which is actually a lot less than we had seen on the UP2718Q, whose very slow FALD operation gave a rise time of around 624ms. At 100Hz the rise time has to be no more than 80ms, and at 144Hz no more than 55.56ms. It will be interesting to see how many displays can conform to this. Thankfully we have seen a much faster FALD implementation on the Asus ROG Swift PG27UQ gaming monitor, where rise times were as low as 10.5ms at the fastest FALD setting.
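The frame-based limit converts to milliseconds as 8 × 1000 / refresh rate. A short Python check of the figures above, including our measured rise times (the function name is ours). The UP2718Q is a 60Hz screen; for the PG27UQ we compare against the strictest 144Hz budget, an assumption since the refresh rate of that measurement isn’t stated here.

```python
def rise_time_budget_ms(refresh_hz: float, frames: int = 8) -> float:
    """Maximum black-to-white rise time allowed, given a limit in frames."""
    return frames * 1000 / refresh_hz


for hz in (60, 100, 144):
    print(f"{hz}Hz: {rise_time_budget_ms(hz):.2f}ms")

# Our measured rise times, checked against the budget at the given refresh rate.
measured = {"Dell UP2718Q": (624, 60), "Asus ROG Swift PG27UQ": (10.5, 144)}
for model, (rise_ms, hz) in measured.items():
    verdict = "within" if rise_ms <= rise_time_budget_ms(hz) else "outside"
    print(f"{model}: {rise_ms}ms is {verdict} the {hz}Hz budget")
```

Because the limit is expressed in frames, the allowance shrinks as refresh rate rises, so high refresh rate HDR gaming screens face the tightest backlight timing.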

The VESA standards have also left out requirements for resolution and aspect ratio, which they feel are independent of the HDR experience. In our opinion this is a good idea for desktop monitors, which come in all manner of resolutions, sizes and formats. We actually called this out in the original version of this article when we first published it, so maybe someone has listened! Audio has also been left out as it is unrelated to HDR.

In addition, VESA is the first standards body to publicly develop a test tool for HDR qualification, using a methodology for its certification tests that end users can apply without having to invest in costly lab hardware. The DisplayHDR test tool is available from their website.

Conclusion

So, to summarise as best we can: HDR as a technology is designed to offer more dynamic images, and is underpinned by the requirement to offer improved contrast ratios beyond the limitations of existing panel technologies. This offers significant improvements in performance and represents a decent step change in display technology. There are various ways of achieving HDR support through the available backlight dimming techniques, although some are quite a lot more beneficial than others if implemented well. In the TV market, HDR has been around for a fair few years and is becoming more widely adopted, with plenty of supporting game and movie content emerging. TV manufacturers also tend to bundle in other spec areas when they talk about “HDR” for their screens, typically a higher than HD resolution (usually Ultra HD 3840 x 2160) and a wider colour space (typically around DCI-P3). Because so much abuse of the term HDR was emerging in the TV market, with so many different specs and standards being used, the UHD Alliance was set up to try and get a handle on things. It came up with its own ‘Ultra HD Premium’ certification, which defines standards for HDR, colour performance, resolution and a few other areas in the TV market. This seems to be the gold standard in the TV market for identifying a true HDR TV.

The desktop monitor market has adopted HDR far more recently. From a content point of view, HDR is still tricky to achieve from a PC, but certainly easier from external devices like Ultra HD Blu-ray players and modern games consoles. From a display point of view, things are less clear than in the settling TV market, and we’ve already seen a wide range of differing interpretations of HDR and different specs being offered. What constitutes a true HDR experience, as now established in the TV market, is confusing in the desktop monitor world right now. NVIDIA and AMD both have their own approaches to standardising this, with NVIDIA’s “G-sync HDR” further along in specification at the moment and seemingly adhering nicely to the existing Ultra HD Premium certification standards from the TV market. VESA have also introduced the DisplayHDR certification standards, but we will likely be left in the same situation as the TV market for a while, with different specs offered amid the general (mis)use of the HDR term. Be careful when looking at monitors, and indeed TVs, where HDR is being promoted, as not all HDR is the same.

Further Reading

Wikipedia – high dynamic range rendering

Flatpanelshd.com – more detailed look at HDR in the TV space

Anandtech – FreeSync 2

DisplayHDR.org – DisplayHDR Standards
